Artificial Intelligence (AI) is revolutionizing the world of storage and data management at an unprecedented pace. This emerging technology is not only streamlining the way organizations store, process, and manage enormous volumes of data, but it’s also significantly improving the insights gleaned from this information. Offering advanced algorithms and machine learning capabilities, AI is powering the future of storage and data management—and is expected to transform the way businesses handle and use their data.

Here are six ways AI is changing the game:

- Improved data analysis: By identifying intricate patterns and trends in large datasets, AI improves the accuracy and speed of data analysis, leading to quicker and more precise insights. With this higher caliber of insights, you can make quick, well-informed decisions that align with your business goals and support profitability and growth.

- Predictive maintenance: With the ability to analyze historical data and real-time usage patterns, AI algorithms can be used to predict when equipment failures are likely to occur and proactively flag them to the right people, mitigating the risk of expensive downtime and devastating data loss.

- Intelligent data storage: AI can analyze data usage patterns and automatically move less frequently accessed data to more cost-effective storage tiers. It also makes it easy for you to quickly locate specific data by indexing and tagging information for easy search and retrieval. With critical data that’s readily available and accessible when you need it, AI technology powers more efficient operations, better resource allocation, and more informed decisions.

- Better data security: By identifying potential threats and anomalies in data usage patterns, AI technology allows you to act quickly to prevent major security breaches. Additionally, using AI-driven encryption methods, you can help ensure your sensitive data is protected both at rest and in transit, making it far more difficult for hackers to access or steal important information.

- Automated routine tasks: With the ability to automate routine data management tasks, AI can streamline workflows, minimize processing time, reduce human error, and enhance the overall efficiency of data management processes, ultimately leading to cost savings and increased productivity.

- Enhanced disaster recovery: AI not only automates backup and recovery processes, but it can also identify potential risks and vulnerabilities and deliver real-time alerts and notifications in the event of an outage or data loss. With a higher degree of control and reliability in your disaster recovery efforts, your business can reduce the impact of unexpected events, improve business continuity, and resume critical operations quickly.
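To make the tiering idea from the "Intelligent data storage" item a little more concrete, here is a minimal Python sketch. It is only an illustration: it uses fixed thresholds rather than a learned model, and the `FileStats` fields, tier names, and threshold values are all assumptions made for this example, not part of any particular product.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta


@dataclass
class FileStats:
    """Hypothetical per-file usage record (fields are assumptions)."""
    name: str
    last_access: datetime
    reads_last_30d: int


def choose_tier(stats, now, cold_after_days=90, hot_reads=20):
    """Pick a storage tier from simple usage statistics.

    A real AI-driven system would learn these boundaries from data;
    this sketch hard-codes them to show the decision being made.
    """
    if stats.reads_last_30d >= hot_reads:
        return "hot"   # frequently read: keep on the fastest storage
    if now - stats.last_access > timedelta(days=cold_after_days):
        return "cold"  # untouched for a long time: cheapest tier
    return "warm"      # everything in between
```

A file read 50 times in the last month would land in the "hot" tier, while one untouched for 200 days would be moved to "cold".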

Harnessing the Potential of AI-Driven Storage

As organizations generate and store ever-increasing amounts of data, AI will continue to play a crucial role in helping businesses manage and utilize that data to drive innovation, optimize operations, and make data-driven decisions that fuel growth.

Hewlett Packard Enterprise (HPE), with solutions like HPE InfoSight, is leading the charge in harnessing the potential of AI-driven storage and data management technologies. At Zunesis, we are committed to helping you capitalize on HPE’s AI-powered solutions to ensure a seamless, optimized, and forward-thinking data management strategy tailored to your specific needs and objectives.

For more information about how to transform your storage and data management processes with HPE powered by AI, contact us here.

Do your Linux servers use LVM?

If not, you should strongly consider it, unless you are using ZFS, BTRFS, or other “controversial” filesystems. ZFS and BTRFS are outside the scope of this discussion but are definitely worth reviewing if you haven’t heard of them and are running Linux in your environment.

Logical Volume Manager for Linux is a proven storage technology created in 1998. It offers layers of abstraction between the storage devices in your Linux system and the filesystems that live on them. Why would you want to add an extra layer between your servers and their storage, you might ask?

Here are some reasons:

- Flexibility

  - You can add more physical storage devices if needed, and present them as a single filesystem.

  - Online maintenance – Need to grow or shrink your filesystems, online, and in real-time? This is possible with LVM.

  - It is possible to live migrate your data to new storage.

  - Thin provisioning can be done, which can allow you to over-commit your storage if you really want to.

- Device naming – you can name your devices something that makes sense instead of whatever name Linux gives the device.

  - Meaningful device names like Data, App, or DB are easier to understand than SDA, SDB, SDC.

  - This also has the benefit of reducing mistakes when working with block devices directly.

- Performance – it is possible to stripe your disks and improve performance.

- Redundancy – it is also possible to add fault tolerance to ensure data availability.

- Snapshots

  - This is one of my favorite reasons for using LVM.

  - You can take point-in-time snapshots of your system.

  - Those snapshots can then be copied off somewhere else.

  - It is also possible to mount the snapshots and manipulate the data more granularly.

  - Want to do something risky on your system, and if it doesn’t work out, have a quick rollback path? LVM is perfect for this.

So how does it work?

According to Red Hat:

“Logical Volume Management (LVM) presents a simple logical view of underlying physical storage space, such as hard drives or LUNs. Partitions on physical storage are represented as physical volumes that can be grouped together into volume groups. Each volume group can be divided into multiple logical volumes, each of which is analogous to a standard disk partition. Therefore, LVM logical volumes function as partitions that can span multiple physical disks.”

I think LVM is much easier to understand with a diagram. The above image illustrates some of the concepts involved with LVM. Physical storage devices recognized by the system can be presented as PVs (Physical Volumes). These PVs can either be the entire raw disk, or partitions, as illustrated above. A VG (Volume Group) is composed of one or more PVs. This is a storage pool, and it is possible to expand it by adding more PVs. It is even possible to mix and match storage technologies within a VG. The VG can then allocate LVs (Logical Volumes) from the pool of storage, and the system sees these LVs as raw devices. These devices then get formatted with the filesystem of your choice. They can grow or shrink as needed, so long as space is available in either direction for the operation.
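If a diagram isn't handy, the PV, VG, and LV relationship can also be sketched as a toy model in Python. This is not how LVM is implemented (real LVM allocates space in fixed-size extents and tracks which PV each extent lives on); the `VolumeGroup` class, its method names, and the device names are made up purely to illustrate the pooling idea.

```python
from dataclasses import dataclass, field


@dataclass
class VolumeGroup:
    """Toy model of a VG: a pool of PVs from which LVs are carved."""
    name: str
    pvs: dict = field(default_factory=dict)  # PV device -> size in GiB
    lvs: dict = field(default_factory=dict)  # LV name -> size in GiB

    @property
    def size(self):
        return sum(self.pvs.values())

    @property
    def free(self):
        return self.size - sum(self.lvs.values())

    def add_pv(self, device, size_gib):
        """Grow the pool by adding another physical volume."""
        self.pvs[device] = size_gib

    def create_lv(self, name, size_gib):
        """Allocate a logical volume; it may be larger than any single PV."""
        if size_gib > self.free:
            raise ValueError("not enough free space in volume group")
        self.lvs[name] = size_gib

    def resize_lv(self, name, new_size_gib):
        """Grow or shrink an LV, as long as the pool has room."""
        if new_size_gib - self.lvs[name] > self.free:
            raise ValueError("not enough free space in volume group")
        self.lvs[name] = new_size_gib


vg = VolumeGroup("vg_data")
vg.add_pv("/dev/sdb", 100)    # a whole disk as a PV
vg.add_pv("/dev/sdc1", 50)    # a partition as a PV
vg.create_lv("db", 120)       # spans both PVs; 30 GiB remain free
vg.add_pv("/dev/sdd", 100)    # expand the pool later
vg.resize_lv("db", 200)       # grow the LV into the new space
```

The point of the model is that the LV named `db` never needs to know which disks sit underneath it, which is exactly the abstraction the Red Hat description is getting at.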

You really should be using LVM on your Linux servers.

Without LVM, many of these operations discussed above are typically offline, risky, and painful. These all amount to downtime, which we in IT like to avoid. While some may argue that the additional abstractions add unnecessary complexity, I would argue that LVM really isn’t that complicated once you get to know it. The value of using LVM greatly outweighs the complexity in my opinion.

The value proposition is even greater when using LVM on physical Linux nodes with local storage. SAN storage and virtual environments in hypervisors typically have snapshot capabilities built in, but even those do not offer all of the benefits of LVM, and LVM adds another layer of protection in those instances. The aforementioned ZFS and BTRFS are possible alternatives, and arguably better choices depending on who you ask. However, due to the licensing (ZFS) and potential stability (BTRFS) issues, careful consideration is needed with those technologies. Perhaps those considerations are topics for a future blog…

Want to learn more? Please reach out, we’re here to help.

Microsoft OneDrive

Microsoft OneDrive has become one of the most useful tools in the Office 365 suite. It is being used by more companies every day. Between the robust feature set and the constant updates, it is easily on par with other cloud storage solutions. It is offered as part of every Office 365 plan. So for any Office 365 user, there is no reason not to use it.

OneDrive was originally rolled out in 2007 under the name SkyDrive; legal issues later led Microsoft to settle for the name OneDrive instead. Just like many other MS products, OneDrive is offered in two versions, a consumer edition and an enterprise edition. Both versions are very similar but have some key differences. The enterprise edition is much better for businesses. The most important difference is the ability to centrally manage the entire organization’s OneDrive.

Over the years since its release, Microsoft has been constantly adding new features.

So what do people like most about OneDrive?

Local Sync folder

When OneDrive is installed, it creates a OneDrive folder on your computer. This folder acts like a regular file folder. It looks just like any folder you would find in your favorites bar, like the documents or downloads folders. This folder also syncs all of its contents to OneDrive’s cloud storage. So not only is it easy to use, it is also accessible from any computer. You just need to log in.

With 1TB included in all Office 365 subscriptions (5GB free for regular users), most users will be able to fit most, if not all, of their files in this single folder. OneDrive also supports multiple folders within the OneDrive directory. This allows you to keep everything organized the way you like it.

Files on demand

To keep local storage usage low, files are moved up into OneDrive until you use them. Files are not automatically kept on your local drive unless you specifically choose a file or folder to “always keep on this device”. You can still see and browse to all of your files. They download immediately when opened.

You can switch between keeping files on your local drive and keeping them in OneDrive just by right-clicking the file or folder and choosing the correct option. There is no need to worry about needing files when you are offline, as long as you have marked them to stay on your device ahead of time.

Sync existing folders

A recent addition to OneDrive is the ability to sync folders other than the OneDrive folder. The most useful of these are the My Documents and Pictures folders. This is an easy way to back up valuable pictures and documents that don’t necessarily fit into your organization of the OneDrive folder.

You can even sync these folders between computers. I use it to sync my desktop backgrounds across all computers. If I find an awesome picture that would make a great background, I just save it into my backgrounds folder. It syncs to OneDrive and automatically adds to my desktop background slideshow on both my work computer and personal computer.

Sharing files

In both editions of OneDrive, sharing files is as easy as right-clicking a file and choosing the sharing option. There are multiple options when sharing, including a read-only version or an editable version, a password-protected version, and a version that is only usable by a single person. You can set all sorts of permissions, especially in the enterprise version.

Access controls are immensely important in the business world when sharing sensitive information. Even better, these access controls can be managed by an admin. This gives businesses more control over who sees their data and how.

Creating shared folders

One of the easiest ways to share files is to create a shared folder that multiple people can access. Whether you want to create a folder for a single team, a whole department, or even the whole company, the process is fairly easy. You create the folder and add the correct names to the list of users. All users will have access to the folder and all files within, with varying levels of permissions. You may want some users to only have read access, while others get write access.

Linked content

OneDrive gives you the ability to create a link to a file and send that to someone else to access it. This is particularly useful when trying to email files to someone else, especially someone outside of your organization. Not only is this convenient, it adds another layer of security to emailing files. It also saves space in everyone’s mailbox by eliminating large attachments.

OneDrive has come a long way in the last few years. Once it was overshadowed by other cloud storage services like Dropbox or Box. Now with its integration with Office 365 and robust security features, it is easily one of the leaders in the space. It is clear that this is a core application in the Microsoft Office suite. I think you will find it’s an extremely useful tool in the business world.

Looking to migrate to Office 365 and see the advantages of OneDrive? Contact Zunesis for more information.

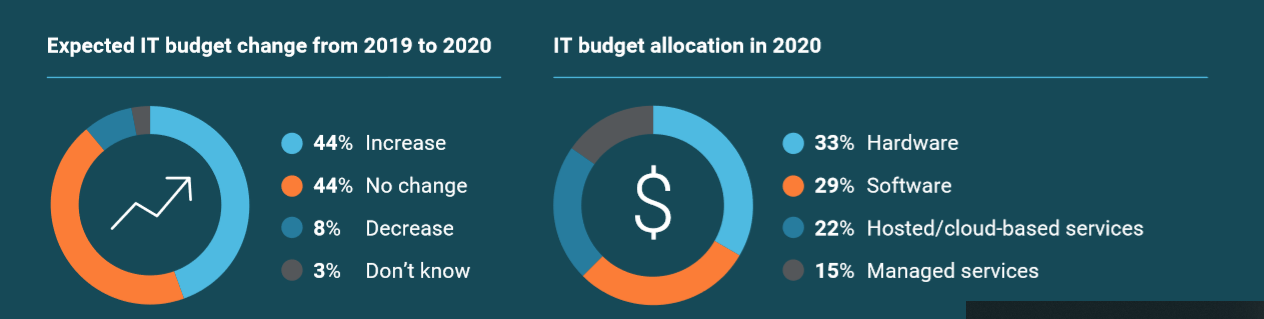

2020 IT Budget Forecast

Spiceworks recently released their 2020 Spiceworks State of IT Report. They surveyed more than 1,000 technology buyers in companies across North America and Europe. The study focused on how organizations will be spending their technology budgets for next year.

According to their report, replacing outdated infrastructure will be the biggest IT spend next year. Most businesses are anticipating top-line revenue growth. As revenue grows, typically IT budgets increase as well.

What will be the key areas of focus in organizations’ 2020 IT Budget Forecast?

Other key findings include:

- IT BUDGET GROWTH: IT budgets rise as businesses replace outdated technology. 44% of businesses plan to increase tech spend in 2020, up from 38% in 2019.

- BUDGET DRIVERS: One in four enterprises (1,000+ employees) are increasing 2020 IT spend due to a recent security incident.

- EMERGING TECH TRENDS: Business adoption of AI-powered technologies is expected to triple by 2021, while adoption of edge computing is expected to double. Large enterprises are adopting emerging technologies 5 times faster than small businesses.

- FUTURE TECH IN THE WORKPLACE: Two-thirds of large enterprises (5,000+ employees) plan to deploy 5G technology by 2021.

88% of businesses expect IT budgets to either grow or stay steady over the next 12 months. Compared to 2019, we’re seeing more upward acceleration.

44% of businesses plan to grow IT budgets in 2020. This is an increase from 38% in 2019. Organizations that expect IT budget growth next year anticipate an 18% increase on average. Only 8% of companies expect IT budgets to decline in 2020.

Key Categories for IT Spend

These categories represent more than half of the total IT Spend in businesses today.

- Security: 7% of Total IT Budget Spend. Pain points include identifying the right solution for their needs and comparing multiple solutions.

- Collaboration and Communication: 6% of Total IT Spend. Pain points include finding the right solution and quantifying the business problem they are trying to solve.

- End-User Hardware: 22% of Total IT Budget Spend.

- Server Technology: 9% of Total IT Budget Spend. Pain points include identifying the right solution and seeing if the purchase will require other system upgrades.

- Networking: 4% of Total IT Budget Spend.

- Storage and Backup: 10% of Total IT Budget Spend.
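As a quick sanity check on the "more than half" claim, the listed shares can simply be added up. The short Python sketch below does that and also shows how the percentages would translate into dollars; the 500,000 budget figure is a made-up example, not a number from the report.

```python
# Category shares of total IT budget, as listed above (in percent).
shares = {
    "Security": 7,
    "Collaboration and Communication": 6,
    "End-User Hardware": 22,
    "Server Technology": 9,
    "Networking": 4,
    "Storage and Backup": 10,
}

total_share = sum(shares.values())  # 58, i.e. more than half

# Hypothetical annual IT budget, used only to illustrate the split.
example_budget = 500_000
dollars = {name: example_budget * pct // 100 for name, pct in shares.items()}
```

The six categories add up to 58% of total IT spend, with End-User Hardware alone accounting for more than a fifth.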

Top 5 IT Challenges Expected in 2020

Businesses are looking for technology vendors and service providers to be an additional tool in their arsenal. They need additional help to navigate through all the pain points out there.

These challenges include:

- Keeping IT infrastructure up to date

- Balancing IT Tasks and Improvement Projects

- Upgrading outdated software

- Following security best practices

- Convincing Business Leaders to Prioritize IT

Small businesses need more guidance than large enterprises when it comes to security best practices and maintaining disaster recovery policies, whereas enterprises will need more help with implementing new tech into their environment.

Future Tech

As for new tech adoptions, there are a few categories that are expected to be adopted by 2021. Enterprises with 5000+ employees will be the ones adopting these tech solutions first.

- AI Technology

- Hyperconverged Infrastructure

- Edge Computing

- 5G Technology

- Serverless Computing

- Blockchain Technology

In Conclusion

To summarize, the results show a healthy global economy. Aging technology in the workplace and more sophisticated security threats have IT spending up year-over-year.

We at Zunesis are prepared to assist you with your 2020 technology initiatives. Schedule a meeting with Zunesis to discuss how we can help you reach your technology goals, from assessments to support and more.

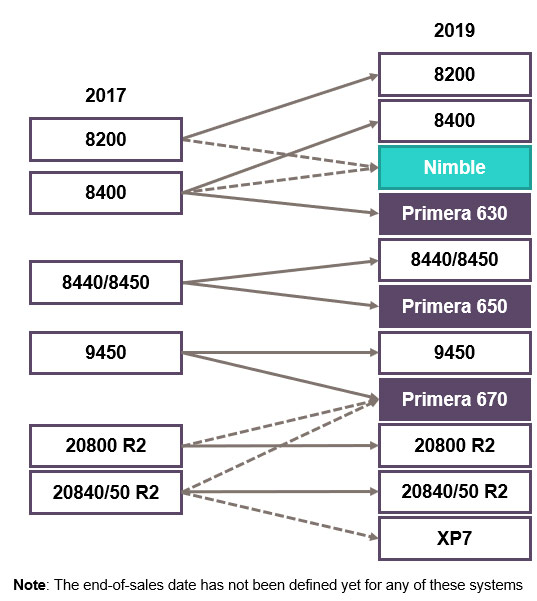

Next Generation of 3PAR

If you were at HPE Discover in June (2019) or if you follow HPE technology development, then I know you’ve heard about HPE Primera, HPE’s newest Tier-0 storage array and an evolution of the HPE 3PAR StoreServ Family. Since the announcement, I’ve been getting a few questions about Primera from our clients. It seemed appropriate to answer some of those questions in this month’s post.

What is HPE Primera?

HPE Primera is a Tier-0 enterprise storage solution designed with extreme resiliency (100% uptime guarantee) and performance targeted at the most critical workloads that simply cannot fail. The key word here is “critical”. HPE Primera is not intended to be a default replacement for the HPE 3PAR StoreServ 8000 and 9000 arrays. Your requirements could certainly dictate an HPE Primera solution, but Nimble (HPE’s Tier-1 solution) may be a better fit.

At launch, HPE Primera includes three models: HPE Primera 630, HPE Primera 650, and HPE Primera 670. Each model is available as an all-flash version (A630, A650 and A670). These models come in 2 and 4-Node versions. In the graphic below, you can see how the current HPE Primera models might line up with the HPE 3PAR StoreServ and Nimble Models:

Isn’t Primera just the latest 3PAR Array?

Well, in many ways, the answer is yes. More precisely, it is an evolution of the HPE 3PAR StoreServ Family. It is the same in that:

- You can have up to 4-Nodes in a Single Array.

- The Nodes are Active/Active.

- The architecture for each node is based on Intel Processors, ASICs and Cache.

- There are all flash and Hybrid options.

- They all support Thin Provisioning, Deduplication, and Compression.

- Many of the feature sets are identical (i.e., Remote Copy, Virtual Copy, Multi-tenancy, Virtual Domains, etc.) across the arrays.

- Management is done through the SSMC Interface.

- There is a Service Processor to manage notification, upgrades, phone home to HPE, etc.

- Both come with all-inclusive software (no separate licenses).

- Both are integrated with HPE InfoSight.

HPE Primera is different from the 3PAR StoreServ arrays in these ways:

- The Gen 5 ASIC in the HPE 3PAR StoreServ was designed for all-flash and uses a distributed cache where the CPU utilizes the Control Cache and the ASIC the Data Cache. The HPE Primera is designed for NVMe and has a Unified Cache.

- The Service Processor on the HPE 3PAR StoreServ is a separate physical or virtual appliance. On the HPE Primera the Service Processor is integrated into the Nodes.

- In the initial launch the HPE Primera will only support Fibre Channel Connectivity.

- The HPE 3PAR StoreServ supports CPGs utilizing RAID 0, 1, 5 or 6. The HPE Primera utilizes only RAID 6.

- Because of the significant change in node architecture and some advancement in features, HPE Primera uses a different InForm OS Code Base than the HPE 3PAR StoreServ Family of arrays.

Is the HPE 3PAR StoreServ Family (8000, 9000) End of Life now?

The short answer is No. HPE has not announced the End of Life (EOL) for the HPE 3PAR StoreServ. When an EOL announcement is made, customers will still be able to purchase them up to 6 months afterward. Hardware upgrades will continue to be made available up to 3 years after the announcement and support will be provided for 5 years after the EOL announcement. This is HPE’s normal cycle when an EOL is announced for an enterprise solution and it will hold true for the HPE 3PAR StoreServ family.
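To see what that cycle would mean on a calendar, here is a small Python sketch that turns an announcement date into the three milestones described above. The 6-month, 3-year, and 5-year offsets come from this post; the announcement date in the usage note is purely hypothetical, since no EOL has been announced, and the function names are my own.

```python
import calendar
from datetime import date


def add_months(d, months):
    """Return d shifted by a number of months, clamping the day if needed."""
    index = d.year * 12 + (d.month - 1) + months
    year, month = divmod(index, 12)
    month += 1
    last_day = calendar.monthrange(year, month)[1]
    return date(year, month, min(d.day, last_day))


def eol_milestones(announced):
    """Milestones following an EOL announcement, per the cycle above."""
    return {
        "last_purchase": add_months(announced, 6),           # 6 months
        "last_hardware_upgrade": add_months(announced, 36),  # 3 years
        "end_of_support": add_months(announced, 60),         # 5 years
    }
```

For a made-up announcement date of August 31, 2020, `eol_milestones(date(2020, 8, 31))` would put the last purchase date at February 28, 2021 and the end of support at August 31, 2025.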

If I’m looking at a 3PAR now, shouldn’t I just go with the Primera instead?

Not necessarily. Remember, there has been no EOL announcement made for the HPE 3PAR StoreServ. And you’ll have 5 years of support once the announcement is made. Also, the Primera is designed for the most Critical workloads in terms of availability and performance requirements. The HPE 3PAR StoreServ or a Nimble Array may line up more appropriately with your unique requirements.

In Summary

HPE Primera is an evolution of the HPE 3PAR StoreServ family and it will fill the needs of Tier-0 workloads. While many of the features and functionality are the same between Primera and StoreServ families, there are also significant differences in architecture. For many, the current HPE 3PAR StoreServ Family (8000/9000) may still be the right fit. For others, a Nimble solution may be the best solution. Contact Zunesis to find the right storage solution for your organization.

Synergy is the interaction or cooperation of two or more organizations, substances, or other agents to produce a combined effect greater than the sum of their separate effects.

HPE Synergy

HPE Synergy is a composable infrastructure which treats computing, storage, and devices as resources that can be pooled together and used as needed. An organization has the ability to adjust the infrastructure depending on what workloads are required at the time. This allows for an organization to optimize IT performance and improve agility.

The best definition I found for Composable Infrastructure is the following:

Compose your infrastructure for any workload.

Some History

HPE started shipping the Synergy Platform during the first half of 2016, over 3 years ago. It is hard to believe this platform has been around for 3 years already.

In general, the Synergy platform provides options in compute, storage and networking. It offers a single management interface and unified API to simplify and automate operational complexities. It helps to increase operational response to new demands.

Composer and Image Streamer Management

Using the Composer and Image Streamer management, an organization has the software-defined intelligence to rapidly configure systems to meet the requirements and needs of the organization. Using HPE Synergy Composer’s integrated software-defined intelligence, you are able to accelerate operations using a single interface.

Synergy Image Streamer enables true stateless computing for quick deployments and updates. This is a new approach to deployment and updates for composable infrastructure. This management appliance works with HPE Synergy Composer for fast software-defined control over physical compute modules with operating system provisioning.

The Synergy platform provides a fully programmable interface that allows organizations to take full advantage of potential vendor partnerships for open-source automation. Tools such as Chef, Docker, and OpenStack also work with the Synergy Platform. Using these tools an organization is able to seamlessly integrate multiple management tools, perform automation and future-proof the data center.

InfoSight and Synergy

HPE has also announced Synergy Support in HPE InfoSight. InfoSight is a cloud-based artificial intelligence (AI) management tool that came with the Nimble purchase. HPE has expanded the platform to include HPE Servers including Synergy and Apollo systems.

It includes the Predictive Analytics, Global Learning and the Recommendation Engine. The AI Engine provides data analytics for server security and predictive analytics for parts failure. Future capability will include firmware and driver analytics.

The Global Learning aspect provides a server wellness dashboard, a global inventory and performance and utilization information. The Recommendation Engine will provide information to eliminate performance bottlenecks on the servers.

Built with the Future in Mind

The Synergy platform is built with the future in mind. From the Management analytics to new compute platforms, to higher power requirements, to increased networking bandwidth, this platform is ready. Architectures are evolving to memory-driven computing, 100Gb networking and non-volatile memory (NVM).

The Synergy frame has been architected to handle these new technologies and more. Each HPE Synergy bay is ready to support future technologies such as Photonics, future CPUs, GPUs and new memory technologies.

For more information on HPE Synergy, Contact Zunesis today.

In Search of the Holy Grail (and avoiding the killer rabbit)

In this post, I’m going to be writing about a solution from HPE to help improve the management of IT Infrastructure. First, I want to say that I have come to understand the term “IT Infrastructure” can be used very broadly, depending on the perspective of the Speaker or Writer and their audience. So, before I get started, let me define what the term means for me.

When I talk about IT Infrastructure components, I am referring to the hardware and software that provide the foundation for Applications supporting the line of business. The Business Applications will include email, document management, ERP and CRM Systems, etc. The foundational components that support these applications include Compute, Storage, and Networking hardware as well as Operating Systems and Hypervisors. In my mind, these foundational pieces are the IT Infrastructure.

Managing Can be a Challenge

Management of IT Infrastructure has long been a challenge for Systems Administrators. Once we moved past the mainframe-dominated environment to a distributed architecture, the number of devices and operating environments (operating systems and hypervisors) grew very quickly. Each component of an IT Infrastructure requires configuration, management, and monitoring (for alerts, performance, capacity, etc.). Of course, each device and operating environment comes with its own management and monitoring tools, but because of the disparate toolset, the burden of correlating the information from each of these sources falls on the shoulders of Systems Administrators. This task of monitoring and correlating data from our IT Infrastructure can be incredibly time consuming. And, because our days are often filled with the unexpected, it is difficult to be consistent in our execution of the monitoring/correlation tasks.

To help ease this burden, the industry has seen the introduction of many applications over the years, designed to aggregate alerts and performance metrics. These tools certainly help, but they can often fall short.

What do we do with the information they are presenting to us?

How do we make sense of the data?

Can these tools help us understand trends in utilization, predict resource short-falls, proactively warn of component failures?

And, can they provide any correlation in the context of analytics data collected from thousands of similar environments from around the world?

That would be the Holy Grail of IT Management. Wouldn’t it?

Okay, a mid-post pause. My reference to the Holy Grail (and the killer rabbit) is from the movie, Monty Python and the Holy Grail. I only used it here because some of my Zunesis Colleagues have written posts using various movie references and I felt the need to respond with my own. I’m older than many of my colleagues so my reference may be a little more dated. However, I think the movie is iconic and will still be familiar to most.

Okay, now back to the discussion of IT Management and the challenges we face. To borrow a phrase from Monty Python, “…and now, for something completely different…”.

HPE InfoSight

In April of 2017, HPE completed the acquisition of Nimble Storage. This acquisition introduced a great storage solution into the HPE Storage family, but, one of the biggest drivers for this acquisition was InfoSight Predictive Analytics. At the time, InfoSight collected data from thousands of sensors across all deployed Nimble arrays globally. This data was fed into an analytics engine allowing global visibility and learning to provide predictive problem resolution back to each Nimble user. The analytics allowed many problems to be resolved, non-disruptively, sometimes before the end-user knew it was a problem.

So, in addition to providing localized alerting, phone-home support, performance data, resource trending, System Administrators now had a tool that could act on their behalf and provide correlations that wouldn’t be possible without the global context.

At the time of the acquisition, HPE committed to leveraging InfoSight for other HPE solutions over time. They have been honoring that commitment ever since. Very quickly they included InfoSight support for 3PAR StoreServ, StoreOnce, and RMC. These were in addition to the existing support for Nimble Arrays and VMware.

As of January 7th of 2019, HPE officially included support for Gen 10, 9, and 8 ProLiant Servers, Synergy, and the Apollo Server families. This recent announcement means that many key components of the IT Infrastructure are now part of the InfoSight Predictive Analytics environment.

For HPE storage solutions, HPE InfoSight Predictive Analytics answers questions like:

- How has my data usage trended?

- When am I going to run out of capacity?

- What if I ran these apps… on the same array?
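To give a feel for the "When am I going to run out of capacity?" question, here is a deliberately simple Python sketch that fits a straight line to daily usage samples and extrapolates. This is not how InfoSight works (its analytics draw on data from many deployed systems); the function name and the sample numbers are assumptions made for illustration only.

```python
def days_until_full(used_tb, capacity_tb):
    """Estimate days until capacity is reached from daily usage samples.

    Fits a least-squares line through the samples (one per day) and
    extrapolates from the most recent sample. Returns None when usage
    is flat or shrinking, since no run-out date can be projected.
    """
    n = len(used_tb)
    if n < 2:
        raise ValueError("need at least two samples to estimate a trend")
    xs = range(n)
    mean_x = sum(xs) / n
    mean_y = sum(used_tb) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, used_tb))
    slope = sxy / sxx  # growth in TB per day
    if slope <= 0:
        return None
    return (capacity_tb - used_tb[-1]) / slope
```

With five daily samples growing by 1 TB a day from 10 TB to 14 TB on a 20 TB array, the estimate comes out to 6 days. A straight line is the crudest possible trend model, but it shows the shape of the question being answered.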

For the newly announced HPE Server environment HPE will provide:

- Predictive analytics to predict and prevent problems

  - Data analytics for server security

  - Predictive data analytics for parts failure

- Global learning that provides wellness and performance dashboards for your global inventory of servers

- A recommendation engine to eliminate performance bottlenecks on servers

For VMware environments, InfoSight Cross-stack Analytics identifies:

- Noisy Neighbor

- Inactive VMs

- Latency Attribution – identify root cause across host, storage, or SAN

- Top Performing VMs – Visibility into Top 10 VMs by IOPS and Latency

Benefits of InfoSight

Based on an ESG Report titled, “Assessing the Financial Impact of HPE InfoSight Predictive Analytics”, published in September 2017, InfoSight provided the following benefits based on a survey of nearly 500 users:

- 79% lower IT operational expenses.

- 73% fewer trouble tickets in the environment.

- 85% less time spent resolving storage-related trouble tickets.

- 69% faster time to resolution for events that necessitate level 3 support.

- The ability to manage and troubleshoot the entire infrastructure environment from a single, intelligent platform.

HPE InfoSight is an application of AI that is here today and will continue to grow in the IT Infrastructure components it supports as well as the benefits it provides. If you have an HPE environment today, you’ll want to find out if HPE InfoSight can be leveraged to help you better manage your IT Infrastructure.

Future of Data Growth

I’m certain anyone reading this is well aware of the statistics about Data growth and how it is impacting storage requirements across all industries. This isn’t a new challenge in our industry, but the conversation does have an added twist when we consider the impact of IoT. We commonly read about companies experiencing anywhere from 20% to 50% year over year growth. The terms “exploding!”, “explosive”, and “exponential” are usually found in articles associated with data growth (and now I’ve used all three in one post). While this data growth continues to be spurred on by the traditional sources associated with business data (databases, documents, email, etc.), we are seeing even greater capacity requirements being generated by IoT devices.
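To put those growth rates in perspective, the compounding is easy to work out. The Python sketch below projects capacity under a constant annual growth rate and computes how long it takes for data to double; the 100 TB starting point is an arbitrary example, and real growth is rarely this steady.

```python
import math


def projected_capacity(current_tb, annual_growth, years):
    """Capacity after compounding a constant annual growth rate."""
    return current_tb * (1 + annual_growth) ** years


def years_to_double(annual_growth):
    """Years for data to double at a constant annual growth rate."""
    return math.log(2) / math.log(1 + annual_growth)


# Example: 100 TB today, at the low and high end of the quoted range.
low = projected_capacity(100, 0.20, 3)   # about 172.8 TB after 3 years
high = projected_capacity(100, 0.50, 3)  # 337.5 TB after 3 years
```

At 20% annual growth data doubles roughly every 3.8 years; at 50% it doubles in about 1.7 years.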

For this post, when I speak of data from IoT, I am lumping together data generated by security video, temperature gauges, vibration sensors, connected cars… you get the idea. In fact, according to some sources, IoT data is growing 10x faster than traditional data sets. And IDC estimates the number of IoT devices will grow to 28.1 billion by 2020. So, data collected from these devices, and the storage solutions needed to maintain this data, will become increasingly important.

Storage for IoT Data

Among our clients, we see tremendous growth in the need for storage to maintain Security Surveillance Video. Beyond simply providing a place for video streams to be written, our clients are analyzing the video, utilizing software to perform anomaly detection, facial recognition, etc. I recently shared a couple of posts, written by two of my colleagues at Zunesis, that expand on this topic. And, Analytics isn’t isolated to video only. The value of IoT devices is that they capture data at the edge, where it is happening, and this is true across all IoT devices. Once collected, software can perform analysis of the data to derive meaning beyond the data points and, in many cases, produce actionable insights. So, storage required for IoT data needs to be able to hit large scale quickly and have performance characteristics that allow analytics in near real time. And, of course, this storage still needs to provide the reliability and availability associated with any business-critical data.

To meet the storage requirements defined above, HPE has created a hardware platform and partnered with two software defined storage (SDS) companies to provide solutions for scale-out storage that will grow from a couple hundred terabytes to petabytes and provide both the reliability and performance required of the data generated by the ever-expanding number of IoT devices. The HPE hardware is part of the HPE Apollo family. The software that utilizes this hardware comes from Software Defined Storage providers, Qumulo and Scality. Here is a summary for each of these solution components:

The Apollo Family of compute and storage:

The Apollo Family of systems from HPE are each designed to provide compute, storage, and networking that meet the needs of both scale-up and scale-out requirements. They are targeted at workloads supporting Big Data, analytics, object storage and high-performance computing.

The scale-out compute part of the HPE Apollo System portfolio includes the Apollo 2000 System for hyperscale and general-purpose scale-out computing. The Apollo 4000 System Family is targeted at Big Data analytics and object storage, while the Apollo 6000 and 8000 Systems are designed to support HPC and supercomputing. Density, ease of management (all incorporate HPE iLO management), and efficient rack-scalability are features shared by all members of the portfolio.

Qumulo File Fabric (QF2)

Qumulo is a software defined scale-out NAS that scales to billions of files in a flash-first design. With the Apollo/Qumulo solution, you can scale from 200TB to over 5PB of usable capacity. This solution uses advanced block-level erasure coding and up-to-the-minute analytics for actionable data management. The file services provided by Qumulo are also supported in the public cloud, currently on Amazon Web Services.

Use cases include:

- Media & Entertainment

- Security Video

- Life Sciences & Medical Research

- Higher Education

- Automotive

- Oil & Gas

- Large Scale Online/Internet

- Telco/Cable/Satellite

- Earth Sciences

Scality RING

Scality is a software defined Scalable Object Storage solution that supports trillions of objects in a single namespace. With the Apollo/Scality solution, you can scale to over 5PB of usable capacity. The access and storage layers can be scaled independently to thousands of nodes that can be accessed directly and concurrently with no added latency.

Use cases include:

- Media & Entertainment

- Security Video

- Financial Services

- Medical Imaging

- Service Providers

- Backup Storage

- Public Sector

So, yes, data footprint is growing and won’t be slowing down anytime soon. If your data set is outside the traditional business data sets and requires scale-out storage that supports large numbers of files and the ability to perform actionable analysis quickly, then you probably need to look outside of the traditional scale-up storage solutions and look at solutions purpose-built for these large-scale workloads. HPE Apollo, Qumulo, and Scality are a great starting point for your research.

2017 was the year of worldwide memory shortages and price spikes. Here’s what the industry predicts for 2018.

Demand for flash memory is estimated to be growing over 45% year over year.

By the end of 2018, 3D NAND will account for the majority of the market, with full capacity expected from the new 3D NAND fabrication facilities, relieving the shortage of NAND flash that hit the market from late 2016 through 2017. This should offer some relief in NAND flash prices, which doubled between 2016 and the peak in 2017. However, prices lower than those in early 2016 may not occur again until 2019, or possibly later.

Several analysts expect that, as a result, NAND flash prices per TB of storage will decline by 20-30% from 2017 to 2018. SSDs became the largest consumer of NAND flash starting in Q1 2017, surpassing smartphones. The movement from consumer products to client and enterprise products should help moderate the traditional NAND flash price cyclicality. Enterprise SSDs showed particular growth in 2017, with 150% y/y growth in shipped capacity. With declining NAND flash prices, 2018 should see increasing demand for NAND flash, particularly in the Enterprise market, where NAND flash is rapidly becoming primary storage in large storage arrays.
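A bit of back-of-the-envelope arithmetic shows why a 20-30% decline still leaves prices above where they started. This sketch assumes the decline is measured from the 2017 peak (a simplification on my part; the analysts may be using a different baseline) and expresses everything relative to the early-2016 price.

```python
def relative_price(peak_multiple, decline):
    """Price after `decline` (as a fraction) off the peak, as a multiple of the baseline."""
    return peak_multiple * (1 - decline)


PEAK_MULTIPLE = 2.0  # prices doubled between 2016 and the 2017 peak

for decline in (0.20, 0.30):
    multiple = relative_price(PEAK_MULTIPLE, decline)
    print(f"{decline:.0%} decline from peak: {multiple:.1f}x the early-2016 price")
```

Under those assumptions, 2018 pricing would still land at roughly 1.4x to 1.6x the early-2016 level. So the relief is real but partial, which squares with the prediction that sub-2016 pricing may have to wait until 2019 or later.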

This is important, because 2018 is poised to be a year of unprecedented growth in the storage market.

Higher-resolution video with higher dynamic range and more cameras are driving storage demand, as is the growth of IoT devices and applications. While IoT data itself is short-term, the analytics that run on IoT edge devices, such as HPE Edgeline 4000, will create analytics data that will need to be protected long-term with low-cost storage solutions. It is also widely anticipated that 2018 will see a sharp rise in metadata, where storage arrays store both the data itself and interesting metadata about that data, with a need for the metadata to be indexed and searchable.

The increase in the number of sensors, including cameras, and the need for rapid processing of this data are creating a greater need for local and network edge storage as well as storage in big data centers (the cloud). Artificial intelligence and lowering costs for high-performance storage are driving new storage hierarchies. Flash will become primary storage for many applications in 2018, with object-based storage taking on secondary storage and archiving roles in many data centers. At HPE, this challenge is being met by joining the Apollo storage platform with Qumulo file system software. Qumulo creates computing nodes on the Apollo platform, forming a cluster that has scalable performance and a single, unified file system. An Apollo/Qumulo solution can scale to billions of files and costs less than legacy storage appliances.

Even with the development of memory-centric computing technology, the need to move large amounts of data around data centers will increase. This will also drive the use of flash memory primary storage, driving hard disk drive storage into secondary storage applications that focus on the costs of storage and don’t require fast data rates.

Data security and privacy will be major drivers of storage investment in 2018. The rash of ransomware episodes as well as hacking and data theft incidents in 2017 showed the general vulnerability of many enterprise environments. Many companies are touting various backup storage solutions as providing protection from ransomware. We see tape gaining respect as a valued element of many data protection solutions because it offers an offline, “air-gapped” backup copy. The same goes for appliance solutions, like the HPE StoreOnce, that can be secured against malicious encryption.

2017 saw NVMe firmly established as the technology that will transform solid-state storage systems, leading to flash memory becoming primary storage for many applications in 2018. Overall storage demand will continue to drive growth in stored capacity in all the major storage products: flash memory, HDDs and magnetic tape, with most of this growth in public and private cloud infrastructures. Data security and privacy will be major drivers for enterprise storage investment in 2018.

Wrapping up, all signs point to exciting new applications hitting the market in 2018 that will drive a surge in storage demand. The good news is that supply should be protected by the rapid advances in 3D NAND flash.

In recent months, HPE has announced two acquisitions that significantly impact their online storage portfolio. In January, it was announced that HPE would be acquiring SimpliVity, a leader in the hyperconverged space. Just two months later, in March, we heard that HPE would be acquiring Nimble, a manufacturer of all-flash and hybrid-flash storage solutions backed by a powerful predictive analytics tool called InfoSight. The acquisition of these two solutions gives HPE one of the strongest storage portfolios in the industry.

The HPE online storage portfolio now includes the following:

• MSA

• StoreVirtual (LeftHand)

• Nimble

• SimpliVity

• 3PAR

So, with so many options, the questions you may be asking are, “Where does each fit into my IT Infrastructure?” or “How do I determine which of these is right for my environment?” There is certainly some overlap amongst these options, but there are also clear reasons why you might choose one over the other.

In this post, I want to provide a summary of each of the solutions and include a few thoughts on why you might consider each as a solution for your environment. I will not cover the XP in this post because it is a solution that has a very narrow set of use cases, and most infrastructure needs will be met by the other online storage solutions in the HPE portfolio.

The solutions are organized here from entry-level to enterprise class.

MSA

The MSA is a family of storage solutions that’s been around for some time and continues to evolve as storage technologies change. This is generally considered an entry-level array. The MSA two controller array offers Block storage over SAS, Fibre Channel, or iSCSI. Despite its entry-level categorization, the MSA is a solution that can provide up to 960TB of RAW capacity and nearly 200,000 IOPS.

In addition to the capacity and performance, this array provides some impressive features, including:

– Thin Provisioning

– Automated Tiering

– Snapshots

– Array-based Replication

– Quality of Service

Consider the MSA if you are trying to meet the needs of a few hosts with a predictable workload profile. This solution is designed with smaller deployments in mind and where affordability is a key factor. If you are looking for deduplication, compression, or plan to scale your environment significantly, the MSA is not for you. We have used the MSA for small VMware environments and as Disk Targets in a backup solution.

StoreVirtual

StoreVirtual defines an operating system for providing scale-out, software defined storage solutions. StoreVirtual is based on the LeftHand operating system. LeftHand was a company HP acquired in October of 2008 to give them an iSCSI solution in their portfolio. The LeftHand Operating System is designed to run on most x86-based hardware and can be deployed as a virtual machine, a hyperconverged appliance, or as a dedicated storage array.

Regardless of how it is deployed, the StoreVirtual solution provides block storage over iSCSI or Fibre Channel. Capacities can vary depending on the method that is deployed but can go up to 576TB of RAW capacity on the SV3200 Appliance Storage Node.

The StoreVirtual uses an all-inclusive licensing model, and the feature set includes:

– Thin Provisioning

– Automated Tiering

– Snapshots

– Array-based Replication

– Multi-site Stretch Clusters

– Built-in Reporting

Like the MSA, StoreVirtual is intended for smaller deployments with predictable workloads. As a scale-out solution, the StoreVirtual provides an easy path for increasing capacity and performance simultaneously by adding one or more StoreVirtual Nodes. The StoreVirtual solution is a relatively affordable solution for the features it provides. And, if you are looking to take advantage of existing compute and storage hardware, StoreVirtual may be a good choice. We see the StoreVirtual solutions deployed in smaller to mid-sized virtual environments.

SimpliVity

To be clear, SimpliVity is not a standalone, online storage solution. It is a hyperconverged solution that provides Storage, Compute, and Networking services in one converged solution. I am including it in these summaries because it includes storage as an important part of the overall solution and would be an alternative to needing to provide a separate storage solution for your VMware environment.

SimpliVity is specifically designed to provide all the infrastructure beneath the hypervisor layer. At this time, it is specifically for VMware environments. As a converged solution, SimpliVity provides everything you would need to support your environment. (Yes, that means backup as well!)

Here is a list of the features you’ll find in a SimpliVity solution:

– Servers & VMware

– Storage Switch

– HA Shared Storage

– Backup & Dedupe

– WAN Optimization

– Cloud Gateway

– SSD Array

– Storage Caching

– Data Protection Apps (Backup & Replication)

– Deduplication

– Compression

This solution is going to be more expensive than the MSA or StoreVirtual offerings; but, again, it provides all the infrastructure for your VMware environment. It’s more than just a storage solution. So, if you are looking to consolidate your infrastructure significantly and the workloads you are supporting have all been virtualized on VMware, this solution may be worth looking at for you.

Nimble

The Nimble solutions offer Block storage in all-flash and hybrid-flash models delivered over iSCSI or Fibre Channel. The current offering from HPE will provide up to 294TB of RAW capacity on the hybrid-flash solution. Of course, compression and deduplication will provide much greater effective capacity dependent on data types.
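To make the raw-versus-effective distinction concrete, here is a minimal sketch. The 294TB figure is the raw capacity quoted above; the data reduction ratios are purely hypothetical placeholders (real ratios depend on your data), and the calculation ignores RAID and sparing overhead, so treat the output as a rough upper bound.

```python
def effective_capacity_tb(raw_tb, reduction_ratio):
    """Capacity seen by hosts if data reduces by `reduction_ratio` (2.0 means 2:1)."""
    if reduction_ratio < 1:
        raise ValueError("a reduction ratio below 1:1 would mean the data grew")
    return raw_tb * reduction_ratio


RAW_TB = 294  # hybrid-flash raw capacity quoted in this post

# Hypothetical ratios for illustration only, not vendor figures.
for ratio in (1.0, 2.0, 3.0):
    print(f"{ratio:.0f}:1 reduction -> {effective_capacity_tb(RAW_TB, ratio):.0f}TB effective")
```

Data that is already compressed, such as video, will sit near the 1:1 end, which is worth keeping in mind when sizing.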

The all-inclusive features of the Nimble family include:

– Thin Provisioning

– Automated Tiering

– Application Aware Snapshots

– Deduplication (all-flash)

– Quality of Service (all-flash)

– Array-based Replication

– Encryption

– InfoSight Predictive Analytics

This solution is designed to handle mixed-workloads and is perfect for mid-sized virtualized environments. InfoSight Predictive Analytics is a significant part of this solution, and I wouldn’t be surprised if it were integrated with other HPE storage solutions in the future.

Taken directly from the HPE QuickSpecs, the InfoSight Analytics provide:

– Proactive resolution. InfoSight automatically predicts and resolves 86% of problems before you even know there is an issue.

– Solves storage and non-storage problems. By collecting and correlating sensors across the infrastructure stack, InfoSight uncovers problems spanning from storage to VMs. In fact, 54% of the problems InfoSight resolves are outside of storage.

– Prevents known issues with infrastructure that learns. If a problem is detected in one system, InfoSight begins to predict the issue and inoculate other systems. Every system gets smarter and more reliable through collective installed base insights.

– The support you’ve always wanted. Automation and proactive resolution put the focus on prevention, streamlining the process, and connecting you directly to support expertise. No more answering routine support questions, sending log files, or attempting to recreate issues.

Nimble should be a consideration for any virtualized environment where you are looking for ease of use, great reporting, and performance.

3PAR

Of course, this has been the workhorse for the enterprise storage offering from HPE since 2010. Depending on the model, the 3PAR Solution can start with 2 Controllers and go up to 8 Controllers for a single array. The 3PAR has both a hybrid and an all-flash solution. Capacities can scale to 6PB RAW in the new 9000 series of the 3PAR family. The 3PAR array delivers Block over iSCSI and Fibre Channel and can also support SMB, NFS, and FTP/FTPS natively.

The all-inclusive feature set includes:

– Thin Provisioning

– Thin Deduplication

– Thin Compression

– Snapshots

– Array-based Replication (add-on license)

– File Persona (SMB, NFS, FTP/FTPS)

– Automated Tiering

– Flash Cache

– Quality of Service

– Non-disruptive LUN Migration

– Array to Array Migration

– Reporting

– Multi-Tenancy

The 3PAR Array should be considered for a multi-workload environment where performance, availability, and scale are the top priorities.

Which One Is Right for You?

As mentioned at the beginning of the post, the goal here was to provide a summary of the primary online storage solutions now offered by HPE and to give a little guidance on where they might fit. Each of these solutions represents a different architecture, and those architectures should be understood clearly before making any final decisions. At Zunesis, we can help you understand which of these solutions might fit your needs and can walk you through the architectures of each.
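As a first-pass filter before that deeper conversation, the capacity and protocol figures quoted in this post can be dropped into a small lookup. This is only a sketch: the `Array` class and `shortlist` function are names I made up for illustration, 6PB is taken as 6000TB, and SimpliVity is left out because it is a hyperconverged solution for which no raw capacity is quoted here. It says nothing about price, features, or architecture.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Array:
    name: str
    max_raw_tb: int        # maximum raw capacity quoted in this post
    protocols: frozenset   # access protocols listed in this post


# Listed from entry-level to enterprise class, as in the post.
PORTFOLIO = [
    Array("MSA", 960, frozenset({"SAS", "FC", "iSCSI"})),
    Array("StoreVirtual", 576, frozenset({"iSCSI", "FC"})),
    Array("Nimble", 294, frozenset({"iSCSI", "FC"})),  # hybrid-flash figure
    Array("3PAR", 6000, frozenset({"iSCSI", "FC", "SMB", "NFS", "FTP"})),
]


def shortlist(required_raw_tb, protocol):
    """Return names of arrays that meet the raw capacity and offer the protocol."""
    return [
        array.name
        for array in PORTFOLIO
        if array.max_raw_tb >= required_raw_tb and protocol in array.protocols
    ]


print(shortlist(500, "iSCSI"))
print(shortlist(1000, "NFS"))
```

A shortlist like this only narrows the field; workload profile, data reduction needs, and budget still decide the final pick.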