We are all winners when there is competition in the semiconductor industry

My personal narrated history of the semiconductor market in my lifetime.

For many years, I have been labeled by my friends and peers in the industry as being an AMD fanboy. In truth, I am a fan of competition and a free market to drive innovation and keep prices affordable for everyone. In this blog, we will dive into a little history about AMD. They are very relevant today not only in the PC market but in the Datacenter as well.

Some history

When people think of computer processors, typically the brand Intel comes to mind. They have been pioneers in the consumer and enterprise microprocessor industry for more than half a century. The company was founded in 1968 in California by Gordon E. Moore, a chemist, and Robert Noyce, a physicist.

Throughout much of the twentieth century, advancements in computer processing could most notably be attributed to Intel Corporation. In the mid-1970s, something interesting happened in the microprocessor market. Another American company, AMD (Advanced Micro Devices), known at the time for providing licensed second-source manufacturing for Intel and others, started to develop and sell its own unique microprocessor designs.

This was the catalyst for consumers and OEMs to have a choice in who provided their computer processors. Until that time, Intel had solely provided or licensed others to make the processors for the IBM personal computer and other enterprise products.

1980s-1990s – AMD can compete … mostly

Throughout the 80s and 90s, AMD was making licensed copies or clones of Intel processors with relative success. In 1996, AMD released its first in-house designed x86 processor. This competed with the Intel Pentium processors operating at 75-133 MHz. They weren’t developing anything revolutionary. They were providing a cheaper alternative to Intel and driving innovation to some degree.

This is the era in which I became an AMD customer. At the time, I could not afford a Pentium-based PC. I cobbled together components that I could afford to build my first computer. It had an AMD K6 266 MHz processor and 16 MB of RAM. It wasn’t much, but I could do my schoolwork on it and it played a few games.

2000-2010 – Things are looking up for AMD

In the early 2000s, AMD released their socketed Athlon processors. They were true game changers as they supported features like on-die L2 cache and double data rate RAM. Later in 2003, they introduced to the market the first 64-bit x86 processor, beating Intel to the punch and taking the innovation crown for a short period of time.

In 2003, AMD also introduced its first server-class processor, the Opteron. The Opteron was a very powerful and viable alternative to Intel’s Xeon processor. Budget-conscious businesses had another option when choosing servers for their data centers, one that wouldn’t break the bank. During this time frame, multi-core processors were introduced to the marketplace. AMD pursued this trend with positive results.

“At this time in my life, I was working in IT. I finally had some money to build the computers I wanted to build. Again, I chose AMD because of their price-to-performance ratio compared to Intel. My thought process involved simple mathematics. If I was able to achieve 90 percent of the performance of the Intel equivalent for 60 percent of the cost, then it seemed like a good choice.” #lawofdiminishingreturns
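That simple mathematics can be sketched in a few lines of Python. The 90 and 60 percent figures come straight from the quote above; the function name is only something made up for this illustration, and the Intel part is treated as a baseline of 1.0 for both performance and cost.

```python
def value_ratio(relative_performance: float, relative_cost: float) -> float:
    """Performance per dollar relative to a baseline scored as 1.0 / 1.0."""
    if relative_cost <= 0:
        raise ValueError("relative_cost must be positive")
    return relative_performance / relative_cost


# Figures from the quote: 90 percent of the performance for 60 percent of the cost.
ratio = value_ratio(0.90, 0.60)
print(f"{ratio:.2f}x the performance per dollar of the baseline")
```

Anything above 1.0 means more performance for every dollar spent than the baseline, which is the whole argument in one number.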

2010-2015 – The Dark ages of which we do not speak

During this time frame, AMD was handily beaten by Intel by most legitimate metrics. They did not innovate or develop new core architectures but chose to pile on the physical processing cores, a strategy that failed them for the better part of a decade.

The consumer products weren’t competitive. The server processors were relegated to budget options and entry-level servers for small businesses. Although I owned many computers built on this architecture, it was a low point for me. I did lose some faith in the company. My concerns centered around the lack of competition in the marketplace. Monopolies are good for no one except Mr. Monopoly, whoever that may be.

2016-Today – The enlightenment and salvation

In 2016, AMD introduced the Ryzen or Zen microprocessor architecture to the world. This revolutionary microarchitecture displayed IPC (instructions per clock cycle) gains of almost 52 percent compared to the previous Bulldozer architecture. AMD was back in the game in a big way.

In the consumer market, AMD sells processors that are faster than Intel offerings that cost twice as much. In the enterprise, AMD has continued to increase core counts with this newest architecture. It has taken some of Intel’s market share in the data center.

TODAY

AMD has released its second iteration of the Zen architecture, called Zen 2. The enterprise offering is called 2nd Gen EPYC. This architecture is truly displacing the Intel offering because it can compete on more than one level. The IPC is on par with and often exceeds the Intel equivalent. The core counts far exceed what Intel has, topping out at a 64-core/128-thread processor named EPYC 7742.

This processor by itself could facilitate a virtual environment for most small to mid-sized businesses. The processor is so revolutionary that virtualization/hypervisor companies are changing their licensing models for fear that a single-socket host would undercut their profits from licensing.

Who offers it?

HPE is a company that has always been an advocate for and an ally to AMD. They sell consumer devices outfitted with the newest AMD Ryzen processors based on the Zen 2 architecture. The servers utilize the newest 2nd Gen EPYC processors based on the same microarchitecture.

These solutions offer better performance. Pricing is competitive to the point where they displace any Intel offering. As an unabashed AMD fanboy, I urge you to look at the metrics and decide for yourself. In almost any computing workload, AMD is a competitive and cost effective option.

Contact Zunesis today for more information on AMD and other IT solutions for your organization.

Additional Resources:

Two AMD Processors Crush four Intel Xeons in tests

Intel insists Xeon vs Epyc benchmark fight was fair, amends speed test claims anyway

Epic Win: AMD’s 64-core 7nm Epyc CPUs Leave Xeon Lying in the Dirt

Heart Disease and Technology

What if heart conditions could be monitored from home?

What if there were predictive analytics to predict someone’s risk of heart disease?

We are getting closer and closer to this being a reality. February is American Heart Month. Heart disease is the leading killer in the world. It is responsible for over 17 million deaths each year. This could rise to 23 million by 2030.

The medical costs are astronomical. The American Heart Association states that the annual cost of this disease is over $500 billion in the United States alone.

This topic is close to my heart. Many members of my family as well as friends have suffered from heart complications and strokes. If these evolving technologies were available back then, it would have made a major difference.

More and more technologies are approved by the FDA every day. From wearable technology to digital stethoscopes, technology is being developed to assist humans with heart conditions. I am going to highlight just a few of the products that are gaining traction in the healthcare industry. The cardiovascular disease technology market is expected to exceed $40 billion by 2030.

Digital Stethoscope

Eko’s digital stethoscope uses algorithms to assist with the treatment and prevention of heart disease. Recently, a suite of algorithms was approved by the FDA. The algorithms can alert technicians to the presence of heart murmurs and atrial fibrillation (AFib) during a physical exam. This is essentially converting the classic stethoscope into an early detection tool.

Eko’s AI is able to identify heart murmurs with 87% sensitivity and 87% specificity. By comparison, the average traditional stethoscope has a sensitivity of only 43% and a specificity of 69% when detecting valvular heart disease. When analyzing the one-lead ECG tracing for AFib, the AI achieves 99% sensitivity and 97% specificity. The algorithms also report fast or slow heart rates, which are typically indicative of heart disease.
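For anyone unfamiliar with those two metrics, here is a minimal Python sketch of how sensitivity and specificity are calculated from screening results. The patient counts are invented purely for illustration and are not Eko’s data; they were simply picked so the results line up with the 87% figures above.

```python
def sensitivity(true_positives: int, false_negatives: int) -> float:
    """Share of patients who HAVE the condition that the test correctly flags."""
    return true_positives / (true_positives + false_negatives)


def specificity(true_negatives: int, false_positives: int) -> float:
    """Share of patients who do NOT have the condition that the test correctly clears."""
    return true_negatives / (true_negatives + false_positives)


# Hypothetical screening of 100 patients with a murmur and 100 without.
murmur_sensitivity = sensitivity(true_positives=87, false_negatives=13)
murmur_specificity = specificity(true_negatives=87, false_positives=13)
print(f"sensitivity: {murmur_sensitivity:.0%}, specificity: {murmur_specificity:.0%}")
```

High sensitivity means fewer missed conditions, while high specificity means fewer false alarms. An early detection tool needs both.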

A simple part of your annual exam that has been the front line tool for over two centuries could be the game changer when detecting life threatening conditions. Treating these conditions early could make all the difference.

Technology developed using artificial intelligence (AI) could identify people at high risk of a fatal heart attack at least 5 years before it strikes. This is according to new research funded by the British Heart Foundation (BHF).

Remote Management of Heart Disease

Some of the biggest trends around cardiovascular disease revolve around remote patient monitoring. With remote patient monitoring, consultations can be conducted over video calls. Patient readings and information are accessible through a digital platform. Patients use wearables such as skin patches, accessories and smart clothing so their condition can be monitored.

Beyond disease management, remote patient monitoring can help to see if patients are adhering to their current regimens. It can also be vital for clinical trial monitoring and pre/post-op monitoring. The data being relayed may make it possible to predict or prevent future cardiac events. At-home innovations are used to monitor hypertension, heart failure and arrhythmias.

In-body microcomputers have been developed in which a pea-sized V-LAP sensor sits within the heart. The sensor provides real-time data to health professionals. When pressure in the heart elevates, it alerts the doctors in time. It charges remotely via an external chest strap that is fitted by the patient. It can collect and transmit data to doctors at any time.

Do you own an Apple Watch? The optical sensor can detect atrial fibrillation in the background. The market for these items will continue to grow. We live in a world where data is everywhere.

Being able to access that data from the comfort of your home can alleviate stress for some patients. In addition, it will help reduce medical costs by cutting down on visits to the hospital.

Virtual and Augmented Reality

Virtual and Augmented Reality is the new reality for training medical staff. Training in a VR headset can put the operators in a virtual cath lab with a 360-degree view of the room and equipment. 3-D renderings of diseased vessel segments are viewable as well.

NovaRad has FDA clearance for the first surgical AR system. The surgeon wears an AR headset. He or she can superimpose and co-register a CT or MRI dataset onto a patient on the OR table. They can virtually slice through a patient to pre-plan a procedure and mark the skin for incisions.

Philips Healthcare is developing a cath lab AR system which allows interventional radiologists or cardiologists to use hand movements or voice commands without breaking a sterile field. They will be able to call up an ultrasound in their AR visor, review CT imaging datasets that they can slice in mid-air, or view 3-D holograms of the anatomy. Hand movements rotate or slice the images.

The Future

These are just a few of the technologies out there that are helping improve the lives of cardiac patients. I can only imagine the developments that are to come.

Pets suffer from heart disease as well. Colorado State University is already using cutting edge technology to assist with heart disease in dogs.

Some of these things that I could only have imagined in sci-fi films are now a reality. I can only hope that these developments will help save lives for years to come.

Zunesis already actively works with some medical institutions. My hope would be that some tools that we can provide them will take them to the next level in heart disease prevention.

Containers-What are they?

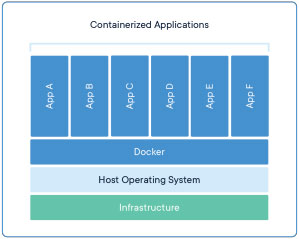

First, let’s begin with what containers are. There are a number of mature container technologies in use today, but Docker has been the long time leader. To many, the name “Docker” is synonymous with “container,” so looking at their explanation would be a good idea.

“A container is a standard unit of software that packages up code and all its dependencies so the application runs quickly and reliably from one computing environment to another. A Docker container image is a lightweight, standalone, executable package of software that includes everything needed to run an application: code, runtime, system tools, system libraries and settings.”

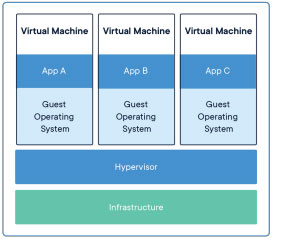

Even among IT professionals, there seems to be some confusion for people who haven’t actually used this technology. Most of us have heard of containers. We may have them powering applications in the workplace, yet still don’t really understand them. People who have mostly wrapped their heads around what virtual machines are might still have trouble understanding container technology.

A Portable Application

Virtual machines run on virtualized hardware, which can cause a pretty significant performance hit for RAM, CPU, disk, and other virtualized resources. An operating system needs to be installed on each virtual machine, and it takes up a large amount of disk space and system resources. This adds up quickly, especially with a lot of virtual machines.

Operating systems also need to boot up before they can run applications. This can introduce quite a bit of delay. Containers, simply put, just run applications on a virtualized operating system, sharing the kernel of the host they run on. They don’t need to boot up, and can be spun up in seconds.

This still may be about as clear as mud for some, so I’ll break this down to a very basic level. A container is just a portable application. That might be a bit basic of an explanation, but it is still pretty accurate in my opinion.

A different use case for containers

Designing microservices that run on multiple clouds, at scale, orchestrated by something like Kubernetes is outside the scope of what I’d like to cover in a blog post. There is also a lot of material out there on this topic. Instead, I’ll propose a more obscure use case: running legacy software.

It isn’t pretty, but the reality is that sometimes legacy systems are still a thing. Some easy examples to pick on are legacy PBX (phone system) applications or building control applications. Those don’t normally generate revenue, but losing control of your phone system or HVAC system could definitely be an issue.

A more extreme example would be revenue-generating, line-of-business applications that just have no suitable replacement. Maybe there is a suitable replacement, but the cost is prohibitive when your old system still works great. Perhaps there are licensing cost changes, or having to buy large quantities of new IP phones to run on a modern PBX just isn’t in the budget. Some of these just cannot run on anything past end-of-life Windows versions such as Windows 2003, 2000, or even NT (YIKES!!!). The software vendor that designed these might have gone out of business, and these systems just never got around to being replaced because they just work and generate revenue.

WINE in a Linux container

Among many other problems, running end-of-life software on end-of-life operating systems is a HUGE security issue. It is difficult, if not impossible, to prevent an attack using known exploits that are simply un-patchable.

What is a better way? You can run Windows applications using WINE (originally a backronym for “Wine Is Not an Emulator”) in a Linux container! This solves a lot of security issues related to the operating system. The “What happens if my 15-year-old server dies?” problem is also solved. If you really wanted to, you could even put that antiquated application in the cloud.
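To give a rough feel for what launching such a container might look like, here is a small Python sketch that assembles a “docker run” command line. Everything specific in it is an assumption for illustration: the image name and the path to the executable are hypothetical, and sharing the host’s X11 socket is just one common way to get a graphical window out of a container, not necessarily how the demo in this post was set up.

```python
import shlex


def wine_container_command(image: str, exe_path: str, display: str = ":0") -> list:
    """Build a `docker run` argument list that starts a Windows program under WINE.

    Assumes the image already has WINE installed and contains the program.
    """
    return [
        "docker", "run", "--rm",                 # remove the container when the app exits
        "-e", f"DISPLAY={display}",              # tell the app which X display to draw on
        "-v", "/tmp/.X11-unix:/tmp/.X11-unix",   # share the host's X11 socket (assumption)
        image,
        "wine", exe_path,
    ]


# Hypothetical image name and executable path, for illustration only.
cmd = wine_container_command("example/wine-legacy-app", "/opt/legacy/app.exe")
print(shlex.join(cmd))
```

The printed line could be pasted into a shell on a machine with Docker installed, provided an image like that actually exists.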

Configuring WINE is also out of scope for what I’d like to cover in this post, but there is plenty of information out there. Rather than pick on out-of-business software vendors, I “containerized” a few freely available Windows applications that I have actually encountered in real business use. For demo purposes, we can just pretend that they are no longer supported and have no suitable replacement.

These applications are:

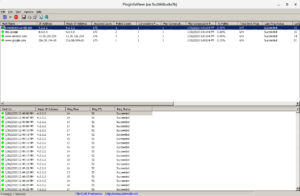

PingInfoView (a ping monitoring tool)

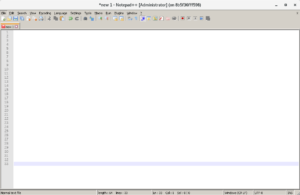

Notepad++ (a text editor used by some systems/network/software engineers)

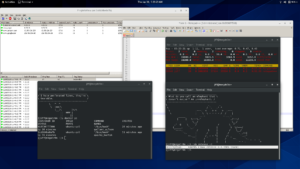

To tie it all together visually, here is a screenshot demonstrating these running on my CentOS Linux laptop:

To demonstrate that these are actually running as containers, please take notice of the container IDs listed at the top of the application windows (8b5f36fff598 and 5cd664be8a7b). These are listed in the output of “docker ps” and shown in the filtered output of the Linux process monitor “top.” These could be easily moved to another machine running a different distribution of Linux, and perhaps onto a random server on your favorite cloud host.
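If you would rather check those IDs from a script than by eye, something along these lines could work. It is only a sketch: it relies on the --format option of “docker ps” to print one ID and name per line, and the container names in the sample text are made up (only the two IDs come from the screenshot).

```python
import subprocess


def parse_docker_ps(output: str) -> dict:
    """Map container ID to container name from tab-separated `docker ps` output."""
    containers = {}
    for line in output.splitlines():
        if not line.strip():
            continue  # skip blank lines
        container_id, _, name = line.partition("\t")
        containers[container_id.strip()] = name.strip()
    return containers


def running_containers() -> dict:
    """Ask the local Docker daemon for running containers (requires Docker)."""
    result = subprocess.run(
        ["docker", "ps", "--format", "{{.ID}}\t{{.Names}}"],
        capture_output=True, text=True, check=True,
    )
    return parse_docker_ps(result.stdout)


# Sample text in the same shape; the names are hypothetical.
sample = "8b5f36fff598\tpinginfoview\n5cd664be8a7b\tnotepadpp\n"
print(parse_docker_ps(sample))
```

Calling running_containers() on the machine hosting the containers should return the same IDs that appear in the application window titles.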

I hope you enjoyed this example of a fun, not so common container use case. Need help designing your infrastructure to power your applications? The friendly engineers at Zunesis have the expertise to help. Contact us today!