Decisions, Decisions, Decisions

Several popular architectures are available when you choose an infrastructure solution: Converged, Hyperconverged, and Disaggregated Hyperconverged. The size and complexity of your environment will influence which one fits best.

Choosing between these architectures requires a thorough understanding of both the current deployments in your data center and the scaling factors specific to your organization.

Converged Infrastructure

IT Sprawl is still a very real issue for data centers. It leads to increased costs, reduced efficiency, and less flexibility. A converged infrastructure (CI) helps with this by creating a virtualized resource hub. It increases overall efficiencies across the data center using a single integrated IT management system.

A converged infrastructure (CI) aims to optimize by grouping multiple components into a single system. Servers, networks, and storage are placed on a single rack. Replacing the old silos of hardware, convergence allows IT to become more efficient by sharing resources and simplifying system management. This efficiency helps keep costs down and systems running smoothly. On the flip side, it is expensive and not the most flexible in scaling.

While converged infrastructure is effective in small-scale environments, most mid-market and enterprise organizations are limited by this architecture. Its hardware is proprietary in nature, and it distributes resources inefficiently at scale.

Hyperconverged Infrastructure

Hyperconverged infrastructure (HCI) was designed to fix the scalability issue, and it certainly improved things. Designed as a building-block approach, HCI allows IT departments to add in the nodes (i.e., servers, storage, or computing) as needed. It continues to simplify management by placing all controls under a single user interface.

By leveraging commodity products across the board, hyperconverged infrastructure significantly disrupted the financial dynamics. It was radically less expensive than converged infrastructure, at least initially, while still providing most of the benefits.

Most organizations today use either a traditional CI or an HCI deployment. There are advantages to both.

While HCI has many benefits, there are some significant disadvantages. For quickly growing businesses that need an easy-to-manage architecture embedding as many elements of modern-day computing as possible, such as disaster recovery, security, and cloud, it may not be the best solution. There are use cases that hyperconverged solutions do not fit, and this has caused problems for customers who disrupted operations because they did not anticipate the impact some workloads would have.

While more scalable than CI, HCI still requires the interdependent growth of storage and servers. That’s a challenge with the types of workloads companies use today.

It’s hard to argue with the manageability and scalability advantages of traditional HCI platforms. IDC predicts that the HCI market revenue will grow at a CAGR of 25.2% to crest $11.4 billion in 2022. As HCI has matured, enterprises have been looking to use it to host a broader set of workloads.

There are still workloads whose performance, availability, and/or capacity demands encourage the use of an architecture that allows IT managers to scale compute and storage resources independently. Such an architecture is a better fit for workloads whose growth is very dynamic and unpredictable.

Disaggregated HyperConverged (dHCI)

Enter the latest solution: disaggregated hyperconverged infrastructure (dHCI). It combines the simplicity of CI and the speed of HCI to create a more resilient, evolved data center architecture. There are numerous benefits to dHCI. The value proposition most attractive to users today is disaster recovery as a service, or DRaaS.

While not every workload can run on a hyperconverged infrastructure, every workload can run on dHCI. That’s part of what makes it appealing: it doesn’t come with the restrictions of its predecessors. Ultimately, disaggregated HCI uses components similar to those of converged infrastructure but applies modern infrastructure automation techniques to enable automated, wizard-based deployment and simple, unified management at costs similar to HCI.

With dHCI, IT teams are able to focus on support and service delivery while Artificial Intelligence (AI) takes care of infrastructure management. The rise in size and complexity of data centers means that such an intelligent solution will help firms get the maximum return on investment (ROI) from their IT equipment.

dHCI is in demand among IT managers who want the simplicity of HCI and the flexibility of converged. It is simple to deploy, manage, scale, and support. Because it is software-defined, compute and storage are consolidated and managed through vCenter, with full-stack intelligence from storage to VMs and policy-based automation for virtual environments integrated throughout.

HPE Nimble Storage dHCI

HPE Nimble Storage dHCI pulls together the best elements of each type of infrastructure, combining the simplicity of HCI management with the reliability, familiarity, and flexibility of scale of our beloved 3-tier architecture. It is essentially high-performance HPE Nimble Storage, FlexFabric SAN switches, and ProLiant servers converged together into a stack. Deployment, operation, and day-to-day management tasks have been hugely simplified with this solution.

Deploying and using the stack out of the box requires very little technical experience. Once it is up and running, day-to-day tasks, such as adding more hosts or provisioning more storage, are “one-click” processes that take up very little technician time. Storage, compute, and networking can be scaled independently of each other. This reduces the requirement for VMware or Hyper-V licensing at scale, and it reduces costs because there is no need to scale out every component when you simply need more storage or compute.

The whole stack plugs directly into the HPE InfoSight portal and support model, which automates simple support tasks so that 1st and 2nd line support are no longer needed to triage issues. dHCI brings this first-class support and analytics to VMware, ProLiant, and FlexFabric as well as the Nimble Storage platform. With dHCI, it’s now possible to deploy an entire virtualization stack and have it monitored and supported 24/7/365 by skilled HPE engineers.
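To make the scaling difference concrete, here is a rough sketch that compares how many units a storage-heavy workload would require when compute and storage grow together versus when they scale independently. The node and shelf capacities are hypothetical numbers chosen for illustration, not HPE specifications.

```python
import math
from dataclasses import dataclass


@dataclass(frozen=True)
class Demand:
    cpu_cores: int
    storage_tb: float


# Hypothetical capacities for illustration only, not vendor specifications.
HCI_NODE_CORES = 32
HCI_NODE_TB = 20.0
COMPUTE_NODE_CORES = 32
STORAGE_SHELF_TB = 20.0


def hci_nodes(demand: Demand) -> int:
    """Coupled scaling: each node adds compute and storage together,
    so the scarcer resource dictates the node count."""
    return max(
        math.ceil(demand.cpu_cores / HCI_NODE_CORES),
        math.ceil(demand.storage_tb / HCI_NODE_TB),
    )


def disaggregated_units(demand: Demand) -> tuple[int, int]:
    """Independent scaling: size compute nodes and storage shelves separately."""
    return (
        math.ceil(demand.cpu_cores / COMPUTE_NODE_CORES),
        math.ceil(demand.storage_tb / STORAGE_SHELF_TB),
    )


storage_heavy = Demand(cpu_cores=64, storage_tb=200.0)
print(hci_nodes(storage_heavy))            # 10
print(disaggregated_units(storage_heavy))  # (2, 10)
```

Under these assumed numbers, the coupled model needs ten full nodes (and ten hosts to license) to reach the storage target, while the independent model needs only two servers plus ten storage shelves.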

Want to learn more about these infrastructure solutions and discover which one is a good fit for your organization? Request a consultation with Zunesis today.

As the founder and CEO of a Colorado-based HPE Platinum Partner (Zunesis), I have been working hand-in-hand with Hewlett Packard for more than 15 years. During that time, I have seen many technology products come and go. In the past year, I have had a chance to understand the value of HPE’s most recent acquisition, SimpliVity, and this solution has really caught my eye as unique and compelling.

SimpliVity is a next generation hyperconverged solution that takes advantage of the hyperconverged strategy while also providing new and improved solutions to the limitations that have existed in other hyperconverged solutions. First, let’s talk about business value and benefits. According to unbiased research firms like Forrester, IDC, and ESG, SimpliVity provides the following business value:

- 73% Total Cost of Ownership (TCO) savings compared to traditional IT infrastructure

- 10:1 device reduction

- Up to 49% TCO cost savings when compared to AWS

- 81% increase in time spent on new projects

- Rapid scaling to 1,000 Virtual Machines with peak and predictable performance

- One hour to provision an 8-node cluster

- 57% of customers reduced RTOs from days/hours to minutes

- 70% improvement in backup/recovery and Disaster Recovery (DR)

- Nearly half of customers retired existing 3rd party backups and replication solutions

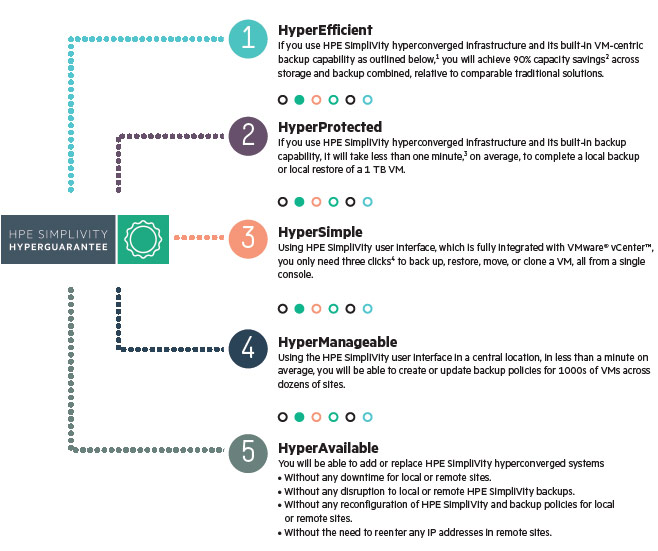

HPE SimpliVity HyperGuarantee

We are seeing enterprise clients deploy SimpliVity because of the way it protects data and provides built in disaster recovery. Enterprise clients with larger mission critical workloads find that SimpliVity is unique in the way it provides a robust and reliable infrastructure framework. SimpliVity provides a hyper-converged solution that is purpose-built for workloads that cannot afford to go down. Take a look at the next generation of Hyper-Converged technology in SimpliVity. Give Zunesis a call and let us spend time explaining how SimpliVity works and the value it can provide your business.

Aruba Operating System (AOS) 8.0

Aruba Operating System (AOS) 8.0 code recently went “GA” to all of Aruba’s customers. AOS 8.0 is a complete ground-up rewrite of the Aruba Operating System, giving it better scalability and increased network performance. Here are some of the more visible enhancements to the platform.

Adaptive Radio Management (ARM) Changes

ARM is Aruba’s proprietary feature that allows APs to automatically negotiate power levels and broadcasting channels to avoid co-channel interference and ensure optimal performance for all clients and applications. The biggest limitation of ARM in the past was that it would not negotiate channel width (20, 40, or 80 MHz), leaving many channels at the higher widths unused. Another past quirk of ARM was that it would recalculate about every 5 minutes. With calculations happening that frequently, it was possible for a microwave running in the break room (which creates interference on the 2.4 GHz band) to completely change the channel assignment of a building.

To address these issues, Aruba has overhauled the ARM protocol in 8.0 – and it even comes with a new name: AirMatch. AirMatch was designed with the modern RF environment in mind. It is tuned for noisy and high density environments, as well as areas where free air space is scarce. (Remember, we only have so many channels available over Radio Frequency; and the FCC has reserved their fair share for the government and emergency services, leaving businesses even fewer available channels).

AirMatch gathers RF statistics for the past 24 hours and proactively optimizes the network for the next day. With automated channel, channel-width, and transmit-power optimization, AirMatch ensures even channel use, assists in interference mitigation, and maximizes system capacity.
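The benefit of planning from a full day of data rather than reacting to the latest reading can be sketched in a few lines. This is only an illustration of the idea, not Aruba’s actual algorithm; the interference scores and both function names are made up for the example.

```python
from statistics import mean


def pick_channel_latest(samples):
    """Reactive approach: choose the channel whose most recent sample is lowest."""
    return min(samples, key=lambda channel: samples[channel][-1])


def pick_channel_daily(samples):
    """Windowed approach: choose the channel with the lowest mean over the window."""
    return min(samples, key=lambda channel: mean(samples[channel]))


# Hypothetical hourly interference scores (0-100) for three 2.4 GHz channels.
hourly = {
    1: [20] * 23 + [90],  # quiet all day, then a microwave burst in the last hour
    6: [35] * 24,
    11: [40] * 24,
}

print(pick_channel_latest(hourly))  # 6
print(pick_channel_daily(hourly))   # 1
```

The reactive picker abandons channel 1 because of a single noisy hour, while the 24-hour view keeps the channel that is quietest overall.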

Zero Touch Provisioning (ZTP):

ZTP automates the deployment of APs and managed devices. Plug-n-play allows for fast and easy deployment and simplified operations, reduces costs, and limits provisioning errors. ZTP was introduced in 70xx Mobility Controllers, and now in ArubaOS 8, we are extending the capability to include 72xx Mobility Controllers. The Mobility Controller receives its local configuration, global configuration, and license limits from the master controller or the Mobility Master and provisions itself automatically.

Simplified Operation:

In contrast to ArubaOS 6, which operates on a flat configuration model containing global and local configuration, ArubaOS 8 uses a centralized, multi-tier architecture under a new UI that provides a clear separation between management, control, and forwarding functions. The entire configuration for both the Mobility Master and managed devices is set from a centralized location, providing better visibility and monitoring, simplifying and streamlining the configuration process, and minimizing repetition.

Centralized Licensing with Pools

IT teams can manage all their licenses from a centralized location with centralized licensing, either from the Mobility Master or the master controller. In the new AOS 8, we have extended this ability to include centralized licensing with Pools. Customers who have separate funding for different groups inside their corporation have the option of simply assigning licenses to each group to manage and consume themselves. This will drastically simplify licensing, especially troubleshooting licensing issues!
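As a rough mental model of licensing pools, the sketch below carves group-specific pools out of a central license count so each group consumes only its own allocation. The class and method names are hypothetical and do not correspond to any ArubaOS command or API.

```python
from dataclasses import dataclass, field


@dataclass
class LicensePool:
    name: str
    total: int
    used: int = 0

    @property
    def available(self) -> int:
        return self.total - self.used

    def consume(self, count: int = 1) -> None:
        """Draw licenses from this pool only; other pools are unaffected."""
        if count > self.available:
            raise RuntimeError(
                f"pool {self.name!r} has only {self.available} licenses left"
            )
        self.used += count


@dataclass
class LicenseServer:
    unassigned: int
    pools: dict = field(default_factory=dict)

    def create_pool(self, name: str, size: int) -> LicensePool:
        """Move licenses from the central, unassigned count into a named pool."""
        if size > self.unassigned:
            raise RuntimeError("not enough unassigned licenses")
        self.unassigned -= size
        pool = LicensePool(name, size)
        self.pools[name] = pool
        return pool


server = LicenseServer(unassigned=100)
engineering = server.create_pool("engineering", 60)
finance = server.create_pool("finance", 30)
engineering.consume(45)
print(server.unassigned, engineering.available, finance.available)  # 10 15 30
```

Because each group draws from its own pool, a shortage in one group is easy to spot and cannot silently exhaust another group’s licenses.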

To summarize, AOS 8.0 is the future platform for all Aruba development. Great amounts of time have been spent re-writing everything to ensure performance and scalability going forward – this project was started before the HPE acquisition took place! Instead of bolting-on updates to the aging 6.0 platform like most vendors tend to do, Aruba has made a huge investment in the future by re-writing the underlying Operating System. This shows their commitment to being the #1 vendor in the wireless space for years to come.

For a better understanding of what upgrades were made in 8.0, download this tech brief.

Download: http://www.arubanetworks.com/assets/ds/DS_ArubaOS8.pdf

There were far too many features and platform advancements for me to cover in a single blog post (especially changes made to the deployment models), but if you would like to have a discussion about these changes, feel free to contact Zunesis.

In this post, I want to take a look at Aruba’s latest addition to their switching portfolio – the 2930m.

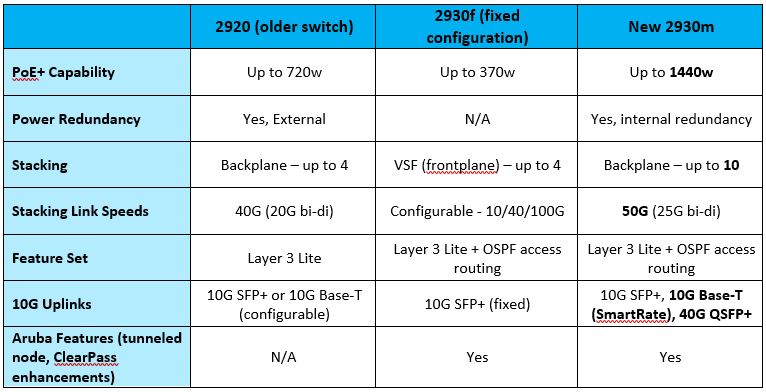

The 2930m is the modular brother to the 2930f (fixed) switch, which has been selling for a number of months. The 2930m is the long-awaited replacement for the older 2920 switch, one of the best-selling switches ever for HPE networking, and includes some configurable options that are not available on the 2930f.

The new 2930m has configurable redundant power supplies, modular backplane stacking, higher stacking density, extremely high PoE capabilities, and a more advanced (but still not complete) layer 3 feature set for the modern edge network. The uplinks are also configurable on the 2930m, allowing for 1x 40G QSFP+ port, 4x 10G SFP+ ports, or 4x 10G SmartRate ports (1, 2.5, 5, or 10G copper ports depending on the capabilities of the device plugged into each port).

In addition to the data sheet below, I wanted to supply you with some information for quick reference. The best way to depict this information will be in table format, so we can compare the older 2920, the fixed configuration 2930f, and the new 2930m:

For more detailed information on this new switch, please download the data sheet here.

Network Management Suites available today through HPE

Network management has been around since the dawn of networking. In the early days, companies like Cisco and Hewlett Packard Enterprise (HPE) would create their own proprietary management software to manage their switching products (e.g., ProCurve Manager was originally an HPE-only tool until it was updated with limited 3rd party support).

This changed as SNMP (Simple Network Management Protocol), first specified in the late 1980s, became broadly supported on switches. It allowed the management plane of the switch to communicate live statistics such as response times, CPU utilization, etc., back to a centralized network management solution in an open-standards manner. It also allowed network admins to have a “single pane of glass” into the basic statistics of their multi-vendor network. SNMP has gone through various revisions to this day, but the basic principle remains the same: allowing network admins to manage a multi-vendor network environment from one tool.
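The “single pane of glass” idea can be sketched as one collector that gathers the same statistics from every device and flags outliers. The example below is a simplified illustration: `simulated_poll` is a stand-in that returns canned values rather than performing a real SNMP request, and the host names, metric names, and threshold are all assumptions.

```python
from typing import Callable, Dict, List

Stats = Dict[str, float]


def simulated_poll(host: str) -> Stats:
    """Stand-in for an SNMP query; returns canned values, no network access."""
    canned = {
        "core-sw1": {"cpu_percent": 35.0, "response_ms": 2.1},
        "edge-sw7": {"cpu_percent": 92.0, "response_ms": 8.4},
    }
    return canned[host]


def collect(hosts: List[str], poll: Callable[[str], Stats]) -> Dict[str, Stats]:
    """Gather the same metrics from every device through one polling function."""
    return {host: poll(host) for host in hosts}


def over_threshold(stats: Dict[str, Stats], metric: str, limit: float) -> List[str]:
    """Return the hosts whose value for `metric` exceeds `limit`, sorted by name."""
    return sorted(host for host, values in stats.items() if values[metric] > limit)


stats = collect(["core-sw1", "edge-sw7"], simulated_poll)
busy = over_threshold(stats, "cpu_percent", 80.0)
print(busy)  # ['edge-sw7']
```

In a real deployment the polling function would be replaced by an SNMP client, but the aggregation and alerting logic stays vendor-neutral, which is the point of the open standard.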

How They’re the Same:

Airwave and IMC both use SNMP to communicate with 3rd party devices, and both support thousands of devices from 3rd party vendors ranging from Cisco to Netgear.

How They’re Different:

The product differentiation comes down to how these tools will be utilized by the Network Admins:

Airwave

Airwave is directed more at “campus” environments (think carpeted office space, K-12, higher ed campuses, etc.) due to how easy it is to use compared to the more daunting setup involved in IMC. Another reason for this positioning is that Airwave is a much more capable wireless management tool, giving customers much better insight into the health of their wireless network than IMC can provide.

An example of this would be the VisualRF plugin in Airwave. VisualRF provides a real-time view of RF coverage and client positioning. This visual tool allows network engineers to see their actual RF coverage inside a building, giving them a good idea of any gaps in coverage where they might need to add an AP. Airwave is licensed per device on the network, and those licenses give you access to the full software suite.

IMC

Intelligent Management Center, or IMC, is committed to being a true “nuts and bolts” engineer tool, even allowing access to IMC’s APIs, giving customers the freedom to program their own modules within the platform. For this reason, HPE has started positioning this as more of a data center-focused “NOC” (network operations center) tool.

Administrators can get much more in depth with the types of SNMP traps, alarms/alerting, and even the types of information that can be reported on with the tool. The initial setup is much more intensive than an Airwave deployment, and the interface is much less user friendly than Airwave. However, you can get extremely detailed real-time information out of the IMC platform, especially when it’s monitoring Aruba (ProCurve) or Comware switches. An example of this would be the QoS Manager plugin, which gives network administrators the ability to define a new global policy or make changes to an existing QoS policy and push those changes out to the network.

Currently, the only wireless management that IMC supports is for the legacy HPE wireless solutions MSM and Unified Wireless, but an “Airwave plugin” is in the works to bridge the gap to include Aruba wireless deployments. IMC is sold as modular software – the base platform is very capable; but to get some specific functionality, such as the QoS Management, you need to license the module. IMC is also licensed by device count in the base platform; however, some of the modules have different licensing schemes.

HPE has committed to continued product development on both platforms. As of right now, there are no early warning signs of one product cannibalizing the other. Choosing which product is right for your environment really depends on what you are hoping to get out of the platform. If you’re looking for something that’s easy to use with awesome built-in reporting, look into Airwave. If, on the other hand, you need an extremely customizable tool that can report on virtually any network statistic under the sun, IMC is your ticket. If you’re not sure which is the better fit for your organization, we are happy to sit down, discuss your needs, and dive deeper into the platforms in order to make the appropriate recommendation.

Composable

Have you heard the term “composable infrastructure” and aren’t really sure what it is? You’re not alone. There’s a lot of confusion out there about infrastructures of all types, from converged to hyper converged and now composable, so we understand the confusion. First, let’s talk about three types of infrastructures to give you a bit more background:

- Converged Infrastructure. This is a hardware-focused, static (aka it doesn’t change) infrastructure that supports both private and cloud environments.

- Hyper Converged Infrastructure (HCI). In an HCI environment, the infrastructure is software-defined instead of hardware-focused like a converged infrastructure. All of the various technologies (compute, storage, networking, and virtualization) are integrated together into a hardware box from a single vendor.

- Composable Infrastructure. Here, nothing is static. It consists of compute, fabric, and storage modules that you can access and use as you need them.

The main differentiator for a composable infrastructure, specifically one from Hewlett Packard Enterprise, is that it’s completely programmable and software-defined. This means that you can access your composable infrastructure to configure and reconfigure all of the resources—compute, fabric, and storage—for whatever your particular workflow needs are at that moment. Composable infrastructure is hallmarked by these three differentiators:

- Fluid Pool of Resources. Your compute, storage, and fabric resources are pooled together and provisioned to use at will.

- Software-Defined Intelligence. This is where you define the resources you need and manage the resource lifecycle.

- Unified API. HPE OneView provides a programmable interface that you use to set everything up through just one line of code.

Synergy

For composable infrastructure, we work with HPE Synergy. Maybe you’ve heard of that, too, and aren’t sure what it’s all about? Let’s dig in.

Consider this: You need to test something, and in order to do that, you need resources. So, to deploy those necessary resources, you would go to the Synergy template and request the exact resources you need in the form of an infrastructure. Synergy then quickly gets to work to compose the exact infrastructure you need from the pool of resources. When you’re done with what you’re working on, you then go back into Synergy and release those resources back into the pool for others to use.
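The compose-and-release cycle described above can be modeled as a shared pool that hands out resources against a template and takes them back afterward. This is a conceptual sketch only; the `ResourcePool` class, its methods, and the template keys are hypothetical and are not the HPE OneView or Synergy API.

```python
class ResourcePool:
    """Toy model of a fluid pool of compute, storage, and fabric resources."""

    def __init__(self, **capacity):
        self.free = dict(capacity)

    def compose(self, template):
        """Reserve everything the template asks for, or nothing at all."""
        short = {k: v for k, v in template.items() if self.free.get(k, 0) < v}
        if short:
            raise RuntimeError(f"insufficient resources: {sorted(short)}")
        for key, amount in template.items():
            self.free[key] -= amount
        return dict(template)

    def release(self, allocation):
        """Return a composed allocation to the pool for others to use."""
        for key, amount in allocation.items():
            self.free[key] += amount


pool = ResourcePool(compute_modules=12, storage_tb=400, fabric_ports=48)
test_env_template = {"compute_modules": 2, "storage_tb": 50, "fabric_ports": 4}

env = pool.compose(test_env_template)
after_compose = dict(pool.free)
print(after_compose)  # {'compute_modules': 10, 'storage_tb': 350, 'fabric_ports': 44}

pool.release(env)
print(pool.free)      # {'compute_modules': 12, 'storage_tb': 400, 'fabric_ports': 48}
```

The all-or-nothing check in `compose` reflects the idea that a template describes a complete infrastructure: either the pool can satisfy the whole request or nothing is reserved.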

Now that you know more about it, contact us to learn how we can help you make the move to composable infrastructure.

Deploying an enterprise wireless solution can be a challenging task, especially for IT administrators tasked with supporting multiple sites. In order to ensure good performance, network administrators would often have to perform a site survey at each location to discover areas of RF coverage and interference, and then manually configure each AP according to the results of this survey.

Static site surveys can help you choose channel and power assignments for APs. However, these surveys are often time-consuming and expensive, and they only reflect the state of the network at a single point in time. RF environments are always changing, and new sources of interference pop up all the time – whether it’s the microwave in the break room that only gets used around lunch time or a new tenant down the hall who put in a new access point. If your wireless solution is not able to adapt to these changes on the fly, then users will have a poor experience. This means your IT department will experience higher call volumes.

Aruba Networks has developed a feature within their operating system to address these ever-changing wireless environments: Adaptive Radio Management (ARM).

ARM maximizes WLAN performance even in the highest traffic networks by dynamically and intelligently choosing the best 802.11 wireless channel and transmit power for each Aruba Access Point in its current RF environment. ARM solves wireless networking challenges such as large deployments, dense deployments, and installations that must support VoIP or mobile users.

Deployments with dozens of users per access point can cause network contention and interference, but ARM dynamically monitors and adjusts the network to ensure that all users are allowed ready access. ARM provides the best voice call quality with voice-aware spectrum scanning and call admission control.

When ARM is enabled it will continuously scan the air on all 802.11 channels and report back what it sees to the controller. You can retrieve this information from the controller or push the data to Airwave to get a quick health check of your WLAN deployment. You can do all of this without having to walk around every part of a building with a network analyzer tool.

In addition to all this, there are some exciting changes coming to the ARM technology and the Aruba Operating System in general with the 8.0 update. Make sure you engage your local Aruba resources for more information.

Beyond The Role of Reseller and Implementer

In the past 10 years, the evolution of technology has continued to accelerate every year at a dizzying pace. It is now more challenging than ever before for IT leaders to develop and implement technology strategies for their organizations without the help of outside resources.

There are simply too many variables and solution options; and the speed with which technologies change can create confusion, delayed decision making, and, consequently, cause delays for important projects. Utilization of outside resources to assist with implementation has been at the core of services offered by Zunesis for over 12 years. But, during this time, we have also provided many of our customers formal, vendor-neutral Assessment and Strategy Development consulting services.

If you are responsible for managing IT architecture direction for your company, you have far more variables to consider than at any time before. Here are a few of the variables you need to factor into your planning:

Virtualization

- What Hypervisor will you use?

- Will you begin utilizing Converged solutions?

- Will you virtualize all workloads? How will you decide?

Storage

- What type of storage will you deploy? Locally attached, or centralized/shared storage using Fibre Channel or iSCSI?

- Will you use All Flash or Hybrid?

- Will you separate storage by workload?

- Will you utilize cloud storage?

- Will you provide storage through Converged or Hyper Converged solutions?

- How will you protect your storage infrastructure?

- How do you ensure a storage infrastructure that will meet performance demands? How will that scale?

Networking

- Will you maintain a physical network infrastructure or begin utilizing some form of software defined networking solution?

- How will you provide security across your network?

Availability

- How will you provide availability for your virtualized infrastructure?

- How will you protect your data?

- Are there SLAs that need to be met? Are these well-defined? Do you know the RPO/RTO requirements for your organization?

- Do you provide a separate protection strategy for your data and virtual machines?

Cloud

- How does Cloud fit into your strategy?

- What workloads will be appropriate in the cloud?

- How will you migrate workloads to the Cloud?

- Will cloud be part of your disaster recovery strategy?

The questions listed above are very familiar to today’s IT leader. And, in fact, they represent only part of all that needs to be considered when developing your IT infrastructure strategies. These questions, and the path to getting them answered, are also familiar to your technical team at Zunesis. As a Solution Provider and Value Added Reseller for over 12 years, we’ve seen the industry through many transformations. And with Account Managers and Technical personnel having decades of experience, Zunesis has been involved with every aspect of IT Infrastructure design, implementation, and support.

But beyond the technical expertise, Zunesis makes it our mission to be an advocate for our customer, apart from representing the manufacturer and software vendor solutions we sell. It is our level of experience and our Customer First belief that qualifies us to help our clients define their IT strategies, not just act as a reseller and implementer.

While many of the challenges faced by you as an IT leader are common, we know that you have unique requirements when it comes to how we can best help you meet those challenges. Over the years our independent consulting services have taken many forms. Here are just a few of the types of consulting services we have delivered:

- Detailed documentation of existing IT Infrastructure. This documentation is often used to begin the process of evaluating future strategy.

- Performance assessments for storage, networking, and compute. In addition to documentation of the performance data, these assessments include infrastructure documentation and recommendations for improvement if performance problems are identified.

- IT Infrastructure Assessments. These are our most comprehensive offering and help define where you are, where you want to go, and recommendations for getting there. Our assessments include:

  - Infrastructure documentation

  - Documentation of goals/objectives

  - Stakeholder discovery sessions

  - Recommendations

  - Implementation Roadmaps

- Backup and Disaster Recovery planning

- Request for Proposal and Request for Quote development

- Data Classification Assessments

The services listed above don’t replace the role of an IT leader in an organization. Rather, they are designed to help organize the information you need to make better-informed decisions. Bringing in an outside resource with experience across many technologies is a way to enhance your own process and help you successfully define and implement your IT strategies.

Helping Organizations Maximize Their Investments to Drive Optimal Performance

Organizations need to do four things well in order to survive and thrive in the idea economy.

These are four areas our customers have told us are impacting their business the most as they transform to a digital enterprise. Hewlett Packard Enterprise (HPE) and Zunesis are helping organizations develop in these areas in order to maximize their investments to drive optimal performance.

Transform to a hybrid infrastructure: Accelerate the delivery of apps and services to your enterprise with the right mix of traditional IT, private, and public cloud. This is about having the right infrastructure optimized for each of your applications whether in your traditional data center or in a public, private, or managed cloud. It all has to work together seamlessly. In order to create and deliver new value instantly and continuously, businesses need infrastructure that can be composed and re-composed to meet shifting demands, infrastructure that will allow organizations to adjust when the inevitable disruption arrives. Your infrastructure has to be everywhere, at the right cost, at the right performance, with the right management, at the right scale. A hybrid infrastructure, one that combines public cloud, private cloud, and traditional IT can maximize performance, allowing for continuous delivery, improved efficiency, and optimized costs.

Protect the digital enterprise: Protect your most prized digital assets whether they are on premise, in the cloud, or in between. This is about security and risk management in the digital world. IT security used to be about defending the perimeter from external threats. The transformation of enterprise IT has created a matrix of widely distributed interactions between people, applications, and data on and off premise, on mobile devices and in the cloud. Security threats can be external or internal in nature and can represent malicious or unintentional actions. Enterprises lack the skills, resources, and expertise required to proactively manage this threat. Enterprises must adhere to complex regulatory, compliance, and data protection issues and ensure enterprise-wide resiliency & business continuity in the face of natural and cyber disasters. All of this requires new thinking and security strategies that enable new ways to do business.

Empower the data-driven organization: Harness 100% of your relevant data to empower people with actionable insights that drive superior business outcomes. This is about how companies harness all relevant business, human, and machine data to drive innovation, growth and competitive advantage. This means empowering stakeholders to make timely and targeted decisions based on actionable, data-derived insights. A data-driven organization strives to rapidly discover the value of its data through an optimized data-centric infrastructure. This foundation understands and engages with customers by listening and interpreting patterns within customer data, uncovers competitive advantages and new market opportunities, and uses data to streamline operations and enable a leaner and faster organization.

Enable workplace productivity: Deliver experiences that empower employees and customers to achieve better outcomes. The workplace is now digital, with interactions and experiences delivered to employees and customers across a multiplicity of locations, times, and devices. Enterprises must deliver rich digital and mobile experiences to customers, employees, and partners in order to engage employees and improve customer experience. Users expect personal, contextual, and secure experiences. As enterprises look to improve productivity and drive customer loyalty, they must deliver highly engaging employee experiences and better serve customers through seamless, personal, contextual experiences.

Success in these four areas requires a partner able to bring all these elements together, aligned to your industry and your enterprise. This is the heart of what Hewlett Packard Enterprise and their trusted Platinum Partner Zunesis deliver. The process is unique for every organization, and the goal is about building business services to drive new products, services, business models, and experiences to compete in the idea economy. HPE and Zunesis help customers deal with big IT challenges and major shifts in technology. To thrive in the idea economy, you have to pick a transformation partner with the vision and breadth to create the best possible future.

Over the last several months, Hewlett Packard Enterprise (HPE) has introduced some new technologies and, of course, new terminology to go along with them. We now have terms like “Composable Infrastructure” and the “Virtual Vending Machine” to understand. Here’s a quick overview of what these trendy new terms mean:

Composable Infrastructure

This is HPE-speak for one of their newest products: Synergy. Recognized as the infrastructure of the future, Composable Infrastructure is designed to run traditional workloads as efficiently as possible while accelerating value creation for a new breed of applications that leverage mobility, Big Data, and cloud-native technologies. This new approach to traditional architecture is built to let the IT organization work with the speed and flexibility of the cloud in its own data center.

For more information, visit the official site here.

Virtual Vending Machine

Recently, HPE announced the new Hyper Converged 380. It is based on one of their most proven technologies, the ProLiant DL380 Gen9 platform, and it introduces the concept of a Virtual Vending Machine. The solution integrates compute, storage, and virtualization. It features simplified upgrades along with increased uptime and service levels. The new software-defined intelligence layer provides advanced analytics and reduces the costs to start, scale, and protect. The HC380 puts you on a direct path to composability.

Check out the site for more details.

Ask us for more information about either of these new technologies and how they can fit into your greater data center roadmap.