What in the world does “metathesiophobia” mean? Simply stated, this is the fear of change. The origin of the term is Greek, meta- meaning “change” and -phobos meaning “fear.” We all have fear of change on some level. It is always evolving; and some of us, more than others, have inherited this trait through our genetics.

When it comes to changes in technology – which we all know are constant – we generally experience two conflicting emotions: we feel excitement at the prospect of something new while simultaneously experiencing a feeling of resistance. We naturally resist change because we fear what we don’t know. Over the years, the evolution of technology has fueled this fear. Although we’ve seen many grand technological advances, we’ve also witnessed too many technology flops to count. This leaves us asking questions about every new technology on the market: What if it does not work? What if this change brings down my network? The list could go on and on indefinitely.

Although it is natural, and even responsible, for IT staff to approach changes in their IT environment with caution, approaching them with fear is not necessary. Worrying about the “What ifs” in any circumstance may help avoid pitfalls, but results are only ever achieved once some level of risk is taken. The beautiful thing is that changing your IT environment doesn’t have to be scary; it can be exciting. It can be done with confidence.

Working with Value-Added Resellers (VARs), who in turn work with companies that have deep R&D budgets, can eliminate the fear associated with implementing new technologies. You can rest assured that the R&D has been done; in fact, manufacturers like HP test each new product to the limit, fix the bugs they find, and release to the public once they’re fully confident in the final outcome.

Working with a good VAR like Zunesis allows you the opportunity to demo IT products before you buy them. This means that not only can you be confident that the product or solution works, generally speaking, but you can be confident it will work in your environment. It allows you to see the pros and cons for yourself before you make a purchase decision.

Let Zunesis remove fear from your life. I will be discussing this over my next few blog posts. If you would like more information to help you be confident moving forward, let me know; and I will be glad to help.

By now, we have all heard that Microsoft is rolling out another new and improved version of its popular Windows operating system. The new version of Windows, named Windows 10, is set to be released on July 29, 2015. So what does the next generation of Windows offer enterprise and small-to-medium businesses, and is it worth it?

Windows 10 for Business

Windows 10 offers protection from modern security threats and includes features like Windows Hello and Microsoft Passport, which, according to Microsoft, make it easier to adopt biometrics and multi-factor authentication using your face, fingerprint, or iris to unlock your device. These features provide a user-friendly way to move away from passwords.

Windows 10 also has built-in defenses to help protect your critical business information from leaks or theft, while separating corporate from personal files. Technologies like Secure Boot and Device Guard ensure you’re protected from power-on to power-off.

Microsoft claims that Windows 10 is familiar and better than ever. It has similarities to Windows 7, including the Start menu, as well as new features like Continuum and Microsoft Edge, which provide new, innovative productivity experiences. Windows Continuum functionality for mobile phones tailors the app experience across devices to transform a phone into a full-powered PC, TV, or even a Smart TV. Microsoft Edge is the all-new Windows 10 browser built to give you a better web experience. Edge allows you to write directly on webpages and share your mark-ups with others. Edge also allows you to read online articles free of distraction or use the reading list feature for saving your favorite reads for later access.

Windows 10 is also expected to drive the next wave of device innovation, powering devices that hang on the wall, team collaboration, and holographic interfaces that open up revolutionary new ways to create, learn, and visualize.

Clearly, Microsoft has included some unique and interesting features in Windows 10. Whether business users will embrace the new operating system will not be known for some time; all we can do is wait for the release and hope for the best. If Windows 10 is not what we expect, be on the lookout for Microsoft to roll out Windows 11 sooner rather than later.

In my last installment of this “Why HPE ProLiant” series, we reviewed HP’s Insight Remote Support capabilities. With this article, we’ll take a deeper look at some of the value-added technology provided through the HPE iLO 4 ASIC.

As a solution provider, a common refrain from clients and prospects is that “a server is a server”; and they don’t see the rationale in paying more for a “brand-name” server such as an HPE ProLiant vs. a “white-box” server. Through learning about what IT professionals are looking for and what their challenges are, we find that the following are core concerns:

– Keep the systems up and running

– Maintain infrastructure while controlling costs

– Increase staff productivity

– Prevent system downtime

– Better control of assets

While “white-box” and similar low-cost/low-value server manufacturers can provide a quick fix by supplying clients with needed CPU and memory “horsepower,” these low-value solutions often provide little additional value to address the concerns listed above. In this post, I want to share with you just one of the many innovations provided with HPE ProLiant servers: Integrated Lights Out (iLO). [Advanced features provided with a one-time license fee]

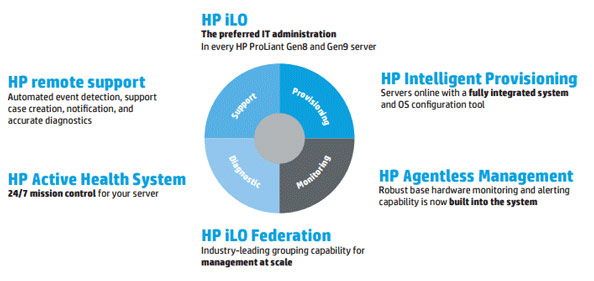

iLO provides the automated intelligence to maintain complete server control from any place, giving you control from a web browser or the iLO mobile app for smartphones. HPE ProLiant Gen 8 and 9 servers include a dedicated iLO 4 ASIC onboard which includes a 1GbE out-of-band network management port. iLO Management technologies contain these capabilities:

Provision: Rapid Discovery and Remote Access – inventory and deploy servers [with Advanced license] using web interface, smartphone app, CLI, or remote console.

Monitor: Active Health System and performance protection with advanced power and thermal control. Agentless management monitors core hardware and provides alerting out-of-band, without adding cumbersome OS-level providers.

Optimize: Integrated Remote Console [most features with Advanced license] provides global team collaboration, video record/playback, and virtual media/KVM. iLO Federation permits management of groups of servers at scale. Enhanced security with Directory Service Authentication.

Support: iLO provides core server instrumentation availability whether the OS is up or down. Download your Active Health System logs, and send to HP Support (or use Integrated Remote Support as covered in February’s article!) for faster problem identification.

What is this Advanced features license I keep mentioning? While many features of iLO are available with every ProLiant straight out of the box, some features indicated in this article require the iLO Advanced Pack. This is a one-time fee license (which includes 1 year of 24×7 HPE software support); and with most Zunesis HP ProLiant quotes, we include this license unless you ask us to remove it. iLO Advanced Pack comes with the new HP OneView Management Suite license as well.

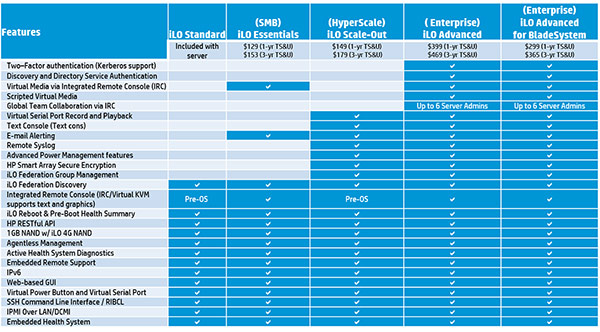

More information about what versions come with what functionality can be found in this chart below (Please note iLO Essentials only available on DL1xx series Gen 9 ProLiant):

A flood that damages mission-critical equipment is among the worst scenarios a data center can face, offering little hope for a quick fix. On April 13, 2015, the Zunesis team received a “first responders call” from a long-term public sector customer who had the misfortune to experience a broken water main that left their main data center under 2 feet of standing water.

The call for help came at 4 p.m. on a Thursday. Zunesis joined forces with HP and immediately responded to assess the damage. By the next morning, it was clear that the data center was living on borrowed time. With 18 racks of equipment compromised, it was imperative that the team immediately instigate a full data center fail-over. Luckily, a secondary data center had just been completed, and plans had been started for a full DR site; but those plans were stalled as the organization waited for additional funding to become available.

A two-fold plan was quickly put into place to swiftly expand the new data center to capacity while keeping the damaged data center up and running. The insurance company was immediately engaged to begin the replacement exercise while Zunesis and HP jumped in to “keep the lights on” in the existing data center. Immediately needed were a large supply of extra drives; power supplies; cables; switches; and, most daunting, an emergency 120TB SAN array to move data to safety.

By Friday morning, emails had been circulated to the highest levels of HPE and Zunesis requesting emergency assistance. Zunesis quickly moved a 3PAR 7200 array from its own internal lab to the customer site and expanded it by an additional 100TB through an emergency drive order to migrate data. HP called in emergency supplies from all over the Americas. Extra drives, power supplies, cables, switches, and even servers arrived throughout the weekend. Within 48 hours, the teams had built up enough reserves to keep the data center live until all of the data could be migrated.

Although all IT organizations know that it’s critical to have a solid DR plan and failover data center in place, the reality is that the data center spotlight is on monitoring and compliance; and the loss of a main data center is crippling. The second most common cause of catastrophic failures (after electrical) in the data center is water leaks. Taking the following three steps can help avoid a flood disaster:

- Initial survey to ensure that the location of our data center is not in a flood plain and that the site is well protected from external water sources. Confirm that the fabric of the building is well enough designed and properly maintained to prevent the ingress of rain (even in extreme storm conditions). Check how sources of water inside the building are routed (hot and cold water storage tanks, pipe runs, waste pipes, WCs, as well as water-based fire suppression systems in the office space). Office space above a data center is almost always dangerous from a water ingress perspective.

- Protection to ensure that any water entering the data center does not have the opportunity to build up and cause a problem. If you are really worried, install drains and a sump pump under the plenum floor. Ensure that the floor space is sealed and that all cable routes through partition walls are stopped up to be air and water tight.

- Monitoring is critical in a data center – we absolutely need to be able to detect water under the plenum floor. Generally, water detection systems use a cable that runs under the floor and causes an alarm to be triggered if it comes into contact with water.

Click to find out more about our Disaster Recovery Assessments.

I talked about the critical importance of turning the 70/30 rule on its head in my last post, that the winners in your competitive set are the ones who are able to spend less time, money, and human resources maintaining their current IT environment and more of their resources using IT to create a competitive advantage. Companies that use IT to help out-invent, out-innovate, and be more customer-focused than their competitors will find themselves top of mind and top of heart for their customers.

One of the areas that is gathering great momentum in the IT industry is mobility. More and more businesses are realizing the benefits of employees and customers being able to access their own information from any device and from anywhere.

There are many benefits to mobility that are influencing this trend:

- Portability: A number of recent studies point to the fact that extending access to critical work applications to all employees leads to greater employee satisfaction and a general sense of enablement and empowerment.

- Availability: The ability to access content from anywhere leads to improved productivity and efficiency. It also leads to much greater responsiveness. From a revenue perspective, opportunities for customers to buy at any hour from any device can be a game changer for many businesses. On more than one occasion, access to my Kindle account on those 3AM over-caffeinated nights has certainly been good news for any Amazon stock holder.

- Power Savings: While the momentum is building for the truly mobile workforce, initial estimates point to a potential for up to 44% power savings through virtualization and BYOD initiatives.

- Personal Ownership of Devices: Can be a real difference maker for businesses leading to reduced costs, higher adoption, and better employee engagement/higher employee compliance.

- Employee Satisfaction/Retention: Flexibility to work when and where they choose is fast becoming a part of the overall compensation package for many workers. Multiple studies point to a significant number of younger workers considering flexible work locations and schedules, social media access, and the ability to use their own devices to be as critical in an overall compensation package as pay.

As with most things in life, there is a flip side. Along with the upsides of mobility come a number of crucial challenges:

- Security: At the same time that employees and consumers are asking for easier, quicker access to their work applications and account information, high profile security breaches point out how sophisticated hackers have become at taking advantage of even the tiniest cracks in security.

- Performance: For most employees and customers, the only thing worse than not having access to applications and accounts is having access hampered by a poorly performing application.

- Cost Effectiveness: Ensuring secure data access with multiple devices from multiple locations at all hours of the day (and night) introduces challenges. How do you create a secure, high-performing environment that doesn’t erode both the cost savings associated with employees and customers owning their own devices and the revenue opportunities of allowing customers to interact with you anytime they choose?

Choosing the right partner

How can you dial in on just the right mix of access, security, and cost effectiveness? The answer is working with a partner that can bring to the table the right mix of products, design expertise, and experience. Zunesis is a partner that can work with you to build a solution that spans virtualization, security, server, storage, and networking capabilities. Allow us to work with you on a design that can deliver a high-performing, secure IT environment that cost-effectively provides all the benefits of mobility to your employees and customers. Make the call today, and see the difference that empowered employees and enthusiastic customers can make for you.

In my last post I wrote about the importance of understanding your current environment before setting out on a search for new data storage solutions. Understanding your Usable Capacity requirements, Data Characteristics, and Workload Profiles is essential when evaluating the many storage options available. Once you have assessed and documented your requirements, you should spend some time understanding the many technologies being offered with today’s shared storage solutions.

Currently, one of the most talked about shared storage technologies is Flash storage. While Flash technology isn’t new, it is more prevalent now than ever before in shared storage solutions. To help you determine whether or not Flash storage is relevant for your environment, I wanted to touch on answers to some of the basic questions regarding this technology. What is Flash storage? What problem does it solve? What considerations are unique when considering shared Flash storage?

In simple terms, Flash Storage is an implementation of non-volatile memory used in Solid State Drives (SSD) or incorporated on to a PCIe card. Both of these implementations are designed as data storage alternatives to Hard Disk Drives (HDD/”spinning disk”). In most shared storage implementations, you’ll see SSD; and that’s what we’ll talk about today.

As you begin looking at Flash storage options you’ll see them defined by one of the following technologies:

- SLC – Single Level Cell

- MLC – Multi-level Cell

  - eMLC – Enterprise Multi-level Cell

  - cMLC – Consumer Multi-level Cell

There is a lot of information available on the internet to describe each of the SSD technologies in detail; so, for the purpose of this post, I’ll simply say that SLC is the most expensive of these while cMLC is the least expensive. The cost delta between the SSD technologies can be attributed to reliability and longevity. Given this statement, you might be inclined to disregard any of the MLC solutions for your business critical environment and stick with the solutions that use only SLC. In the past this may have been the right choice; however, the widespread implementation of Flash storage in recent years has brought about significantly improved reliability of MLC. Consequently, you’ll see eMLC and cMLC implemented in many of the Flash storage solutions available today.

Beyond the cell technology, there are three primary implementations of SSD used by storage manufacturers for their array solutions. Those implementations are:

- All Flash – As you might have guessed, this implementation uses only SSD, without the possibility of including an HDD tier.

- Flash + HDD – These solutions use a tier of SSD and usually provide a capacity tier made up of Nearline HDD. These solutions often provide automated tiering to migrate data between the two storage tiers.

- Hybrid – These solutions offer the choice of including SSD along with HDD and can also offer the choice of whether or not to implement automated tiering.

So why consider SSD at all for your shared storage array? Because SSD has no moving parts, replacing HDD with SSD can result in a reduction of power and cooling requirements, especially for shared storage arrays where there can be a large number of drives. However, the most touted advantage of SSD over HDD is speed. SSD is considered when HDD isn’t able to provide an adequate level of performance for certain applications. There are many variables that impact the actual performance gain of SSD over HDD, but it isn’t unrealistic to expect anywhere from 15 to 50 times the performance. So, as you look at the solution options available for storage arrays that incorporate SSD, keep in mind that your primary reason for utilizing Flash is to achieve better performance of one or more workloads.

Historically, we have tried to meet performance demands of high I/O workloads by using large numbers of HDD; the more spinning disks you have reading and writing data, the better your response time will be. However, to achieve adequate performance in this way, we often ended up with far more capacity than required. When SSD first started showing up for enterprise storage solutions, we had the means to meet performance requirements with fewer drives. However, the drives were so small (50GB, 100GB) that we needed to be very miserly with what data was placed on the SSD tier.
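The trade-off described above can be illustrated with a little arithmetic. The sketch below is in Python, and every number in it is a hypothetical assumption chosen for illustration: the workload targets, the per-drive IOPS and capacity figures, and the `drives_needed` helper itself. The SSD figure simply applies the low end of the 15 to 50 times range mentioned earlier. Real arrays add RAID overhead, caching, and controller limits that this ignores.

```python
def drives_needed(required_iops, required_gb, iops_per_drive, gb_per_drive):
    """Return (drive_count, total_gb) needed to meet both performance and capacity."""
    # Ceiling division without floats: -(-a // b)
    for_iops = -(-required_iops // iops_per_drive)
    for_capacity = -(-required_gb // gb_per_drive)
    count = max(for_iops, for_capacity)
    return count, count * gb_per_drive


# Hypothetical workload: 20,000 IOPS and 10 TB usable (assumed values).
REQUIRED_IOPS = 20_000
REQUIRED_GB = 10_000

# Hypothetical per-drive figures (assumptions, not vendor specifications).
HDD_IOPS, HDD_GB = 200, 600
SSD_IOPS, SSD_GB = HDD_IOPS * 15, 400  # low end of the 15x to 50x range

hdd_count, hdd_gb = drives_needed(REQUIRED_IOPS, REQUIRED_GB, HDD_IOPS, HDD_GB)
ssd_count, ssd_gb = drives_needed(REQUIRED_IOPS, REQUIRED_GB, SSD_IOPS, SSD_GB)

print(f"HDD: {hdd_count} drives, {hdd_gb} GB provisioned")
print(f"SSD: {ssd_count} drives, {ssd_gb} GB provisioned")
```

With these assumed figures, the HDD design is sized by performance and ends up with six times the required capacity, while the SSD design is sized by capacity alone.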

Today you’ll find a fairly wide range of capacity options, anywhere from 200GB to 1.92TB per SSD. Consequently, you won’t be challenged trying to meet the capacity requirements of your environment. Given this reality you may be tempted to simply default to an All Flash solution. But, because SSD solutions are still much more expensive than HDD, you want to make sure to match your unique workload requirements accordingly. For instance, it may not make sense for you to pay the SSD premium to support your user file shares; but you might want to consider SSD for certain database requirements or for VDI. This is where you’ll be thankful that you took the time to understand your capacity and workload requirements.

When trying to achieve better performance of your applications, don’t let the choice of SSD be your only consideration. Remember, resolving a bottleneck in one part of the I/O path may simply move the bottleneck somewhere else. Be sure you understand the limitations of the controllers, fibre channel switches, network switches, and HBAs.
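One way to picture a moving bottleneck is to treat the I/O path as a chain whose end-to-end throughput is capped by its slowest hop. The following Python sketch is only an illustration under assumed values: the component names, the MB/s ceilings, and the `find_bottleneck` helper are all hypothetical, and a real assessment would use measured figures for your own controllers, switches, and HBAs.

```python
def find_bottleneck(limits_mbps):
    """Return the (component, limit) pair with the lowest throughput in the path."""
    component = min(limits_mbps, key=limits_mbps.get)
    return component, limits_mbps[component]


# Hypothetical throughput ceilings in MB/s for each hop (assumed values).
io_path = {
    "drives": 400,
    "array_controller": 1600,
    "fc_switch": 1600,
    "hba": 800,
}

before = find_bottleneck(io_path)

# Swap the drive tier for SSD (assumed 15x faster); everything else is unchanged.
upgraded_path = dict(io_path, drives=io_path["drives"] * 15)
after = find_bottleneck(upgraded_path)

print("Before:", before)
print("After: ", after)
```

In this made-up path, faster drives only double the achievable throughput, because the HBA becomes the new limit once the drive tier is no longer the slowest hop.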

Finally, you’ll need to understand how manufacturers can differentiate their implementation of Flash technology. Do they employ Flash optimization? Are deduplication, compaction, or thin provisioning part of the design? Manufacturers may use the same terminology to describe these features, but their implementation of the technology may be very different. I’ll cover some of these in my next blog post. In the meantime, you may want to review the 2015 DCIG Flash Memory Buyers Guide.

Strategic technology trends are defined as having potentially significant impact on organizations in the next three years. Here is a summary of a few trends according to Forbes; Gartner, Inc.; Computerworld; and other technology visionaries:

- Wearable Devices – Uses of wearable technology are influencing the fields of health and medicine, fitness, aging, education, gaming, and finance. Such devices include bracelets, smart watches, and Google Glass. Wearable technology markets are anticipated to exceed $6 billion by 2016.

- Cloud Computing – Gartner says cloud computing will become the bulk of new IT spend by 2016. Business drivers behind cloud initiatives include disaster recovery or backup, increased IT cost, new users or services, and increased IT complexity.

- Smart Machines – Smart machines include robots, self-driving cars, and other inventions that are able to make decisions and solve problems without human intervention. Forbes states that 60% of CEOs believe that smart machines are a “futurist fantasy” but will, nonetheless, have a meaningful impact on business.

- 3D Printing – 3D printing offers the ability to create solid physical models. The cost of 3D printing will decrease in the next three years, leading to rapid growth of the market for these machines. Industrial use will also continue its rapid expansion. Gartner highlights that expansion will be especially great in industrial, biomedical, and consumer applications, showing that 3D printing is a viable way to reduce costs through improved designs, streamlined prototyping, and short-run manufacturing. Worldwide shipments of 3D printers are expected to double.

- New Wi-Fi Standards – Prepare for the next generation of Wi-Fi: first, the emergence of the next wave of 802.11ac and, second, the development of the 802.11ax standard. Wi-Fi hotspots are expected to be faster and more reliable. The Wi-Fi Alliance predicts that products based on a draft of the standard will likely reach markets by early 2016.

- Mind-Reading Machines – IBM predicts that by 2016 consumers will be able to control electronics by using brain power only. People will not need passwords. By 2016, consumers will have access to gadgets that read their minds, allowing them to call friends and move computer cursors.

- Mobile Devices – Mobile device sales will continue to soar, and we will see less of the standard desktop computer. Worldwide mobile device shipments are expected to reach 2.6 billion units by 2016. Tablet PCs will be the fastest growing category with a 35% growth rate followed by smartphones at 18%.

- Big Data – Big data refers to the exponential growth and availability of data, both structured and unstructured. The vision is that organizations will be able to take data from any source, harness relevant data, and analyze it to reduce cost, reduce time, develop new products, and make smarter business decisions.

Only time will tell which of these will materialize as well as to what extent. However, one thing is certain: technology is getting faster, smarter, and more mobile by the minute. Interacting with technology any place and any time has become the norm, and this trend will continue to have a greater and greater impact on all types of organizations. Look up: George Jetson might be your next employee.

Anyone who has ever worked with Microsoft’s Active Directory, either as an end user or administrator, has undoubtedly come across strangeness and unexplained occurrences. Active Directory serves many purposes: identity management, resource policy deployment, and user security management to name a few. Active Directory handles its extremely complex inner workings in a very robust and flexible way. It is designed to resist outages and lost communication while continuing to provide services to users. While all of that is good from an availability standpoint, it also makes it easy for problems to stay hidden from administrators.

Help Desk conversations about Active Directory often include phrases such as “I don’t know why that happened,” “That’s weird. I’ve never seen it do that before,” and “Oh well, it works now.” These conversations can lead to the realization that Active Directory isn’t totally healthy and could be performing better than it is currently. Something as simple as logging on to a workstation may generate multiple errors that aren’t visible to the end user except as a log on delay.

The health of Active Directory can be affected in many ways. Changes to Active Directory throughout the years can add up to significant problems that seem to show up suddenly. Examples of these types of changes could be any of the following:

- Adding or removing domain controllers

- Upgrading domain controllers

- Adding or removing Exchange servers

- Adding or removing physical sites to your environment

- Extending the schema

- Unreliable communication between domain controllers

These changes, if done incorrectly, can cause multiple problems including log on issues,  replication failures, DNS misconfiguration, or GPO problems to name a few.

Simple questions that you can ask yourself to determine if your Active Directory is currently not as healthy as it could be are as follows:

- Do your users complain of strange log on or authentication issues?

- Does it take an abnormally long time for users to log on to their workstations?

- Do your GPOs work sometimes and not other times?

- Do you get strange references to old domain controllers or Exchange servers that have long since been removed?

- Do you have issues resolving server names through DNS?

- Do your DNS servers get out of sync?

- Do DNS entries mysteriously disappear?

- And maybe most importantly, have you ever employed an admin with full rights to Active Directory who you later learned was not qualified?

Active Directory is integral to the IT success of just about every company. Finding issues and correcting them before they become a problem can prevent outages and future losses in revenue. Whether you are currently experiencing noticeable issues or just want a “feel good” report on the current status of your Active Directory, Zunesis can provide that peace of mind. With over 15 years supporting Microsoft Active Directory services for our customers, we have the experience and skills to get your Active Directory to a healthy state. Our method of using various tools to extract Active Directory information, analyze that data, and prepare and deliver a detailed report has proven very successful. Contact Zunesis today to set up an appointment to talk about your Active Directory needs.

The ability to form closer customer relationships, stay at the forefront of market trends, and create competitive differentiation comes at a critical time for marketing organizations across all industries. In today’s competitive world, there are too many companies competing in an environment where there are not enough customers. This is especially true for high-tech and information technology companies where technology advancement occurs at a rapid pace. Now, more than ever, marketing organizations must create clear differentiation by tapping into new streams of customer, market, and competitive data made available from external sources like the public web. We’ve proven that when this is done with some discipline, exceptional results can be achieved.

We’ve seen how a large marketing organization’s ability to discover, synthesize, and act upon public web data can have a direct and tangible impact on their marketing strategy. I’ve had the good fortune to work with HP and other large brands in my career, and they all grapple with the same issue: they are drowning in a sea of data, all made possible by the public web and social media. I’ve realized that in order to see tangible results from social media listening (read: glean actionable insight), technology alone only gets you so far. When combining technology with human analysis, however, quantifiable value can be realized.

The problem with using social media listening technology as a standalone solution is its inability to automatically provide the in-depth analysis that a human is capable of providing. For example, we worked with a major sports team who wanted to increase social media engagement amongst women. A listening platform may provide data about what content women are engaging with online, but it will not offer the strategy needed to drive more engagement. Human analysis needs to be conducted in order to understand and digest certain demographic data trends, interpret the underlying reason for engagement, and develop specific social media strategies that will better target and engage female audiences. In other words, the platform only gets you so far. Gleaning insight requires combining the tool (technology) with interpretation and analysis that take a market research approach to understanding the data.

We often forget that business, like life, is merely a series of decisions. The success of a company can be directly related to the quality of the decisions made by its employees; this is particularly true for marketing teams. By combining social media listening technology with human analysis, it is possible to take the complex data set now available online and categorize and synthesize it to identify key themes, influential voices, and conversation share. Companies now have access to a vast data set provided by the public web. The opportunity, then, is to convert this data into actionable insight that is directly linked to marketing processes and drives business outcomes.

This is a blog about a journey: a journey from being the customer of an IT Solutions Provider to serving the customer. It is about taking my ideas about the way I should have been treated as a customer and turning them into an action plan, or template, for the way I now treat my customers.

Background:

I am a college graduate with a Bachelor’s degree in Computer Science. In my 20 years in the IT industry, I have had a number of different titles – End User Support Specialist, Network Administrator, Sr. Systems Engineer, IT Manager – all with 3 companies. I did a 5-year stint with Company A in the Legal Profession in Ohio, and I served 15 years with Company B in the Financial Sector in Las Vegas.

Over the years, I have always wondered what it would be like to work for a vendor. I was curious about the ability to engage a wider range of technology, more in depth than the “in-house” IT professional usually experiences. In fact, I had multiple offers over the years; but Company B was a great place to work, so I never left.

In early 2014, I felt things were getting stale with Company B, so I got serious about a career change. I had a great relationship with Zunesis as a customer, so I explored employment opportunities with them. There was an immediate need and fit. In a few short weeks, I signed on with Zunesis; and after a nice week-and-a-half vacation in California with my family, I started a new career.

I had been the main contact for IT vendors for Company B. I have experienced all sorts of salespeople:

- The quiet type who let their products do the talking,

- The boisterous type who think wining and dining is the way to close a sale (which I never complained about),

- The “inside” sales guy who is constantly calling just because I had asked for a quote,

- The “BFF,” that is, as long as there is a pending sale,

- And, finally, the good ones who handle the relationship the way you ask them to and put the “Customer First.”

I have also experienced all types of engineers:

- The “Of course I will come to Vegas and help you, stay out all night playing blackjack, and fall asleep in the conference room the next day,” type (not kidding),

- The “I know everything about everything,” type,

- And the good ones who are thorough, concise, and do what they say they are going to do. Again, the ones who put the “Customer First.”

As a customer, I have experienced several types of salespeople and engineers; this experience will help shape the type of Solution Architect I’d like to be as well as the type of salespeople with whom I will align. I also bring to Zunesis years of experience as a customer, someone who has walked miles in the customer’s shoes. I bring a refreshing perspective to Zunesis’ motto: “Customer First.”

Stay tuned for my next entry as I describe my experiences in my first six months at Zunesis.